Almost no one has built load testing correctly. Worse yet, our collective mental models seem to be damaged. I know these claims sound preposterous on the surface, but I hope by the time I’m done, I will convince you they’re true. As someone who has built my own load test systems, I was taken aback when I discovered how flawed my thinking was. However, before I demonstrate the damage, let me start with a quick walk-through of how I think our collective model works.

We start with an environment that handles some sort of concurrent usage—perhaps a website or an API. Depending on exactly what type of load testing is being done, this might vary a little, but in general we have a static set of users who use the system over and over again. Some load test systems call these “threads” or “virtual users.” Each user generates a request, waits for a response, and then makes more requests until the test script is completed.

To generate load, the system will run the test script over and over until some “done” condition is hit. The load test script may have some customization, such as the users varying their actions a little or pausing between requests. The load test may serve several different purposes, such as probing memory constraints or checking whether the system remains stable over a long period of time. Once the test has been completed, you might examine data such as the average response time and the fiftieth percentile.
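The model described above can be sketched as a single blocking loop. This is a minimal illustration rather than how any particular tool is implemented: `run_virtual_user`, `steady_system`, the simulated clock, and the 10 ms response time are all assumptions made for the example.

```python
def run_virtual_user(send_request, duration_ms):
    """Simulate one closed-loop virtual user on a fake clock.

    send_request is a hypothetical callable that takes the send time (ms)
    and returns how long the response took (ms). The user never sends the
    next request until the previous one has returned.
    """
    clock_ms = 0
    latencies_ms = []
    while clock_ms < duration_ms:            # the "done" condition
        latency_ms = send_request(clock_ms)  # blocks until the response arrives
        latencies_ms.append(latency_ms)
        clock_ms += latency_ms               # only now can the next request start
    return latencies_ms


def steady_system(sent_at_ms):
    """Assumed system under test: always answers in 10 ms."""
    return 10


results = run_virtual_user(steady_system, duration_ms=100_000)
print(len(results), "requests, average", sum(results) / len(results), "ms")
```

Note that the request rate is not a setting anywhere in this loop. It falls out of how quickly the system answers.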

While I don’t think this model of load testing is broken for all possible load test goals, it certainly is for the vast majority of scenarios I have worked on. Have you found any issues yet?

Traffic in the Real World versus a Test

Stepping back from computers for a moment, imagine you are in a car, driving on the highway. You’re cruising at the legal speed limit, and there are about nine cars nearby. We might say there are ten users of this particular stretch of highway at this particular time. We could claim that in normal conditions, the highway can process ten cars per second on the given stretch of road. Then an accident happens. You go from a four-lane highway to a single lane. You and the other cars nearby can no longer move at ten cars per second. Traffic is making it difficult to navigate, so you slow down to half the legal speed limit. Assuming that it takes an hour for the wreck to be cleared away, what do you think will happen?

In the real world, you would see a traffic jam. They might close down the highway to allow workers to clear the wreck. This certainly is going to make travel slow. Assuming that traffic remained constant, ten additional cars would appear at the traffic jam site every second, while fewer would leave.

If our load test model were parallel to the real world, it too would keep generating traffic in spite of the traffic jam. However, our load test only has a maximum of ten users it can test with. That means the system would never see the load it would in a real-world scenario. Your ten users would be going at half-speed, for sure, but the analogous additional cars would never appear. You would never see a traffic jam. You couldn’t; you only have a maximum of ten users. In a sense, your load test backs off the system, letting the system recover more quickly. After all, there’s no need to shut down the highway if you only have ten cars waiting. Azul Systems cofounder and CTO Gil Tene coined the term coordinated omission to describe the problem.
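To put rough numbers on the analogy, here is a small sketch comparing the two models over the hour it takes to clear the wreck. The arrival rate of ten cars per second comes from the example above; the reduced capacity of two cars per second past the accident is an assumption chosen only for illustration, as are the function names.

```python
def open_model_backlog(arrivals_per_sec, served_per_sec, seconds):
    """Real-world style traffic: new cars keep arriving no matter what."""
    waiting = 0
    for _ in range(seconds):
        waiting += arrivals_per_sec
        waiting -= min(waiting, served_per_sec)
    return waiting


def closed_model_backlog(users, served_per_sec, seconds):
    """Typical load test: a fixed set of users, each re-entering only after it gets through."""
    waiting = users
    for _ in range(seconds):
        served = min(waiting, served_per_sec)
        waiting -= served   # these cars get past the wreck...
        waiting += served   # ...and only then come back around
    return waiting


ONE_HOUR = 3600
print("open model backlog:  ", open_model_backlog(10, 2, ONE_HOUR))
print("closed model backlog:", closed_model_backlog(10, 2, ONE_HOUR))
```

Under these assumptions the open model ends the hour with tens of thousands of cars queued, while the closed model can never have more than its ten users waiting, however long the slowdown lasts.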

Lying to Ourselves with Data

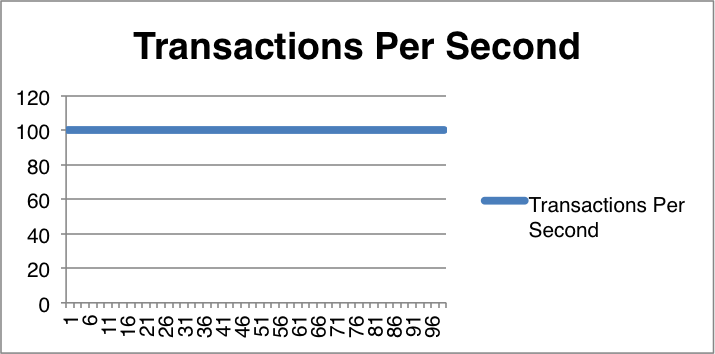

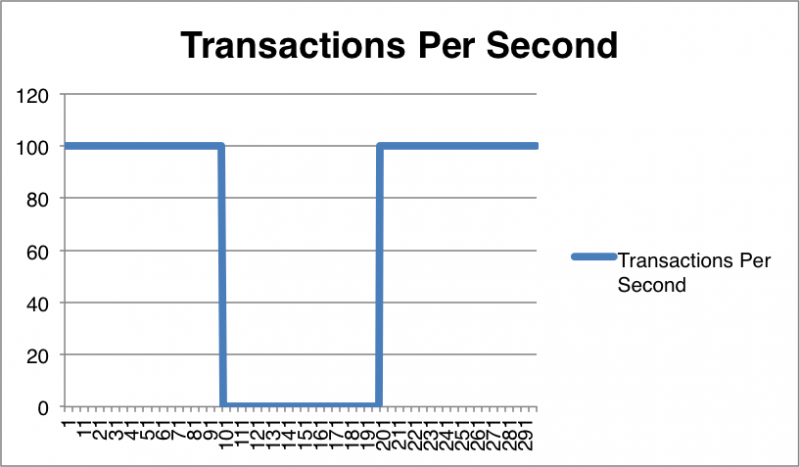

Worse yet, the statistics you gather would be completely wrong. Imagine you have a load test running for a nice round one hundred seconds, and for each second you have a hundred transactions from a single user. That means you should see one transaction per ten milliseconds, or ten thousand transactions by the time the test is done.

That system is not only very fast, but our stats would also demonstrate how good it is. Now, instead of one hundred seconds, imagine a three-hundred-second load test with the same parameters as last time. In the first hundred seconds you would have ten thousand transactions, just like the previous example. Then, you unplug the network cable for a hundred seconds. Finally, the cable is plugged back in and the test runs just like normal. For two hundred seconds, the test ran perfectly normally, but for the middle one hundred seconds you had no transactions, meaning your total was twenty thousand transactions instead of the thirty thousand a steady system would have handled.

What do you think the median is in our load test results? Ten milliseconds.

How about the 50th percentile? Ten milliseconds.

Maybe the 99th percentile will show the trouble? Ten milliseconds.

Perhaps with a bit more precision—say, the 99.99th percentile? Also ten milliseconds.

In most load test systems, you’d never know there was a problem, unless you look at either the max time or the total number of transactions. We don’t even know how bad it would get because we don’t know how the system would look if you properly piled up transactions, like with the traffic jam. Your load test will look stellar, but the system would actually be behaving badly. You are coordinating with the system, allowing it to rest when it is at its worst.
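The percentile claims are easy to check with a few lines of code. The sketch below assumes the recorded data is twenty thousand transactions at ten milliseconds each, plus a single request that blocked for the entire hundred-second outage. That one stalled sample is an assumption about how a blocking tool would record the outage, and the nearest-rank `percentile` helper is written for this example rather than taken from any tool.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# 20,000 normal transactions at 10 ms each, plus one request that sat
# blocked for the whole 100-second outage (assumed recording behavior).
latencies_ms = [10] * 20_000 + [100_000]

for pct in (50, 99, 99.99):
    print(f"{pct}th percentile: {percentile(latencies_ms, pct)} ms")
print("max:", max(latencies_ms), "ms")
print("transactions recorded:", len(latencies_ms))
```

Only the max and the transaction count give any hint that a third of the test was spent with the system unreachable.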

This might be a real-world scenario for some systems, as people do abandon webpages when they don’t load quickly. However, that sounds a lot like saying it’s a good thing long lines convince customers to not buy our product.

How Can This Be Fixed?

Now that you see the problem with our existing model, what do we do about it? While the ideal solution would be to fix the tools, that is often not practically possible. I know Gatling and wrk2 have made efforts to fix the coordinated omission issue, but not all tools are open source or technologically flexible enough, or you may not have the expertise to change the tool. Replacing the tool with one that works better is possible, but that also has costs. There is another solution.

If you can’t fix the tool, you can try to fix the data. HdrHistogram can calculate roughly how bad things would have been if you had continued to submit typical load. It does not add that additional load onto the system, but it includes in the performance results the best case for what the system would have looked like with that load. That way you have some idea of just how badly things went. This will give you a more realistic lower bound on how the system behaves under load.
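The idea behind that kind of correction can be sketched in plain Python. This is not the HdrHistogram API. It is a hand-rolled approximation of back-filling by expected interval, and `correct_for_omission`, `percentile`, and the sample data (the unplugged-cable example, with one assumed 100-second stalled request) are all illustrative.

```python
import math


def correct_for_omission(latencies_ms, expected_interval_ms):
    """Back-fill the samples a blocked load generator never got to send.

    For every latency longer than the expected interval between requests,
    add the latencies that requests sent on schedule during the stall
    would have seen, in the best case.
    """
    corrected = []
    for value in latencies_ms:
        corrected.append(value)
        missing = value - expected_interval_ms
        while missing >= expected_interval_ms:
            corrected.append(missing)
            missing -= expected_interval_ms
    return corrected


def percentile(samples, pct):
    """Nearest-rank percentile, written for this example."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


raw = [10] * 20_000 + [100_000]   # one request stalled for the whole outage
fixed = correct_for_omission(raw, expected_interval_ms=10)

print("samples:", len(raw), "->", len(fixed))
for pct in (50, 99):
    print(f"{pct}th percentile: raw {percentile(raw, pct)} ms, "
          f"corrected {percentile(fixed, pct)} ms")
```

The corrected data set has as many samples as a steady test would have produced, and the high percentiles now reflect the outage. It is still a best case, because the system never actually had to absorb the missing requests.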

No matter how you fix the problem, ultimately it is our responsibility to understand what our tools do as well as what the results mean. All too often we abdicate our responsibility to the tool, letting it become the expert and just believing the results it outputs. If we don’t bother to learn a little about how our tools work, understand the internals, and, in this case, do the math, we may give our stakeholders bad advice—and, ultimately, our users a much worse experience.

User Comments

I would also recommend reading this:

http://www.perfdynamics.com/Manifesto/USLscalability.html

It's not that difficult to make a model. If your application uses various APIs, I would first recommend modeling each API on its own. Once that is done, you can model combinations.

You should use production data, to determine the load and API usage, and try to make a model.

For example, Blazemeter allows you to create a multi-plan, in which you can use multiple tests that can be started at exactly the same time.

I believe you are speaking of something different than I am. I am speaking of mental models and load test outputs rather than the practical application of building a mathematical model for load testing.

Testing can informally be thought of as gathering information for stakeholders. If the data or information I gather is accurate but not described correctly, that means there is a difference between my mental model and the tools I am using, which means I may tell my stakeholders the wrong thing. To give a practical example, I might do good testing on the wrong build version and report everything is okay. That report would be totally wrong, even though I thought I was doing everything right. My mental model of what I was doing and what I actually tested did not match.

In this case, I think I have found a case where our collective mental model is wrong (although some may know better). Oftentimes in testing, we write up bugs when we notice some difference between our mental model and the actual results. Think of this article as a sort of bug report. Since I can't write a bug for every load test system in existence, I am telling as many stakeholders (fellow testers) as I can of the limits of the existing system.

This "bug report," as it were, is where I (and I think others) assumed that load tests would generate constant traffic and thus my statistical model of 'worst case' would be accurate. If I said "have 30 users hit the system for the next 20 minutes," I expected 30 users constantly using the system. I never considered that the threads that simulate the users are blocking in many cases. That is to say, each user has to wait for the HTTP request to come back. If the system normally responds in 1 second but starts to take 10 seconds, the amount of traffic the system handles in total is lower than expected. That means the total traffic data I got and what I thought I was doing were not the same. In typical testing tradition, I documented this behaviour and I documented some workarounds.

From what I can tell, you are describing more Load Test 101 usage and how to build models on how much traffic the system normally experiences, etc. Those are useful items, but not the point of the article. Hopefully that helps to clarify the point.

- JCD