Most people don't fully understand the complexities and scope of a software performance test. Too often, performance testing is assessed in the same manner as functional testing and, as a result, fails miserably. In this four-part series we will examine what it takes to properly plan, analyze, design, and implement a basic performance test. This is not a discussion of advanced performance techniques or analytical methods; these are the basic problems that must be addressed in software performance testing.

This is the third part of a four-part series addressing software performance testing. Parts one and two can be accessed below.

The general outline of topics is as follows:

- Part 1

  - The role of the tester in the process

  - Understanding performance and business goals

  - How software performance testing fits into the development process

- Part 2

  - The system architecture and infrastructure

  - Selecting the types of tests to run

- Part 3

  - Understanding load (operational profiles, OP)

  - Quantifying and defining measurements

- Part 4

  - Understanding tools

  - Executing the tests and reporting results

Understanding Load

Performance testing examines the behavior of a system under some form of load, so we need to examine exactly what we mean when we use the term "load." In the simplest view, load can be expressed as a formula: Load = Volume/Time. The problem is, what does the volume comprise, and over what time period is it measured? The answer is captured in a simple concept known as an "operational profile" (OP).
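Here is a minimal illustration of Load = Volume/Time. The sketch groups logged events into fixed intervals and reports the rate of each activity type per interval, so it shows both how much load there is and what that load consists of. The `(timestamp, activity)` event format and the function name are assumptions made for illustration. They are not part of any particular tool.

```python
from collections import Counter, defaultdict

def load_profile(events, interval_s=3600):
    """Return {interval_index: {activity: events_per_second}}.

    events: iterable of (timestamp_s, activity) pairs, where timestamp_s is
    seconds since the start of the observation window (an assumed log format).
    """
    volume = defaultdict(Counter)
    for timestamp_s, activity in events:
        # Volume: count each activity type within its interval.
        volume[int(timestamp_s // interval_s)][activity] += 1
    # Load = Volume / Time, expressed as events per second for each activity.
    return {
        interval: {activity: count / interval_s for activity, count in mix.items()}
        for interval, mix in sorted(volume.items())
    }

# Hypothetical log: three events in the first hour, one in the second.
events = [(10, "login"), (20, "search"), (30, "search"), (3700, "login")]
print(load_profile(events))
```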

Load is always relative to a specified time interval. The volume and mix of activities can change dramatically depending on time. To understand load more effectively we need to define what time interval is important to properly assess load (volume).

First, we need to determine the time period we are interested in, sample it, and analyze the content of those samples. People, devices, etc., tend to work in patterns of activities. Those patterns have a fixed or limited duration. Each sequence of events forms an activity pattern that typically completes some piece of work.

To analyze behavior, we typically look at short time periods: one day, one hour, fifteen minutes, and so on. I have found that most people work in an environment that is in constant flux (chaos, if you prefer). Embedded application testers have a slight advantage here: devices (mechanical or electronic) are not as easily distracted or interrupted as humans.

The choice of time period depends to some degree on the type of application being tested. I use an hour as a base and then make adjustments as needed. If a typical session for a user or event in our sample lasts fifteen minutes, each one-hour sample contains four session samples. We would use this information to create the necessary behavior patterns in our load generation tool. For a mainframe or client/server application, this has to be adjusted to a general activity number (per user, per hour). Those devices typically connect to the application (network) and stay connected even while they are on but inactive. The same problem can occur with certain types of communications devices, such as BlackBerries, which are always on but may do nothing other than polling.
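The arithmetic in this paragraph can be sketched as follows. The second function applies Little's Law: average concurrency equals the arrival rate multiplied by the average session duration. This is a standard queueing result that the article does not mention. It is included here, as an assumption, to show one way of turning sessions per hour into an average number of simultaneously active sessions. The result is an average, not a peak. It also does not account for devices that stay connected while idle.

```python
def session_samples_per_window(window_min, session_min):
    """Number of complete sessions of a given length that fit in one sample window."""
    return window_min // session_min

def average_concurrency(sessions_per_hour, session_min):
    """Little's Law (an assumption, not from the article): L = arrival rate * time in system."""
    return sessions_per_hour * (session_min / 60)

print(session_samples_per_window(60, 15))   # one-hour window, fifteen-minute sessions
print(average_concurrency(400, 15))         # hypothetical 400 sessions per hour
```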

Most elements we want to measure vary based on known and unknown factors. This means we cannot sample or measure something once; we must sample it several times in order to compute a meaningful set of values.

How many samples or measures do we need? Table 1 shows a rough rule of thumb for the relationship between sample size and accuracy of the sample contents. You will notice this is very similar to what you see in commercial polls (sample more than 1,000 and the margin of error is +/- 3 percent).

| Sample size | Margin of error |
|---|---|
| 30 | 50% |
| 100 | 25% |
| 200 | 10% |
| 1,000 | 3% |

Table 1

These numbers represent how many times you have to sample something to reach the corresponding level of confidence in the data (margin of error). For example, if you sample the specified time period thirty times, your data will have a margin of error of roughly +/- 50 percent.
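Table 1 is a rough rule of thumb. For comparison, the textbook margin of error gives tighter bounds at small sample sizes (about +/- 18 percent at 30 samples) and agrees with the poll figure at 1,000 (about +/- 3 percent). That formula applies to a proportion estimated from a simple random sample at roughly 95 percent confidence, using the worst case p = 0.5. It assumes independent random samples, and activity data often violate that assumption, as the next paragraph explains. This is one reason a more conservative rule of thumb is reasonable.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Worst-case (p = 0.5) margin of error for a proportion at ~95% confidence.

    Assumes independent, simple random samples, which real activity data may not be.
    """
    return z * math.sqrt(p * (1 - p) / n)

for n in (30, 100, 200, 1000):
    print(f"{n:>5} samples: +/- {margin_of_error(n):.1%}")
```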

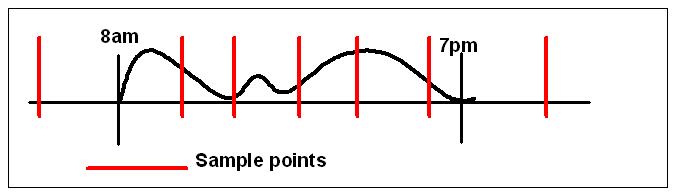

As I noted earlier, activity changes over time. If you sample randomly, you may miss critical data or you may hit only the high or low levels of activity. Some time periods have little or no activity while others have peaks. As we see in figure 1, random samples may skew the measure we get.

Figure 1
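One standard way to avoid the skew shown in figure 1 is stratified sampling. This is a general survey technique introduced here, not one taken from the article. You divide the period into strata (for example, quiet hours and busy hours), sample within each stratum, and weight each stratum by its share of the period. The per-minute activity list and the strata boundaries below are hypothetical.

```python
import random
from statistics import mean

def stratified_estimate(activity, strata, per_stratum, seed=0):
    """Estimate mean activity per minute over a period.

    activity: per-minute activity counts for the period (hypothetical data).
    strata: (start, end) index ranges that together cover the whole period.
    per_stratum: number of minutes to sample from each stratum.
    """
    rng = random.Random(seed)
    weighted_total = 0.0
    for start, end in strata:
        picks = rng.sample(range(start, end), per_stratum)
        # Weight the stratum's sample mean by how many minutes the stratum spans.
        weighted_total += mean(activity[i] for i in picks) * (end - start)
    return weighted_total / len(activity)

# A quiet first hour followed by a busy second hour.
activity = [0] * 60 + [100] * 60
print(stratified_estimate(activity, [(0, 60), (60, 120)], per_stratum=5))
```

A purely random draw of ten minutes from this period could, by chance, land mostly in one hour. Sampling each stratum guarantees that both the quiet and the busy periods are represented.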

We must sample at appropriate intervals within the time period of interest, and we must also be aware of overlapping periods of activity. If the activity on a system or application comes from several time zones, as shown in figure 2, there could be dead zones in the activity that would again skew the samples.

Figure 2

The gray areas represent time intervals with little or no activity for the indicated time zone. Including these dead zones may distort the behavior patterns we are trying to identify and cause us to create an invalid test.
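The sketch below shows one way to find such dead zones. It shifts each region's local-time hourly profile to UTC, sums the profiles, and flags hours whose combined activity falls below a threshold. The business-hours profiles, the UTC offsets, and the threshold are all hypothetical.

```python
def to_utc_profile(local_profiles, utc_offsets):
    """Shift 24-hour local-time activity profiles to UTC and sum them."""
    combined = [0] * 24
    for profile, offset in zip(local_profiles, utc_offsets):
        for local_hour, count in enumerate(profile):
            # UTC = local time minus the zone's UTC offset (09:00 at UTC-5 is 14:00 UTC).
            combined[(local_hour - offset) % 24] += count
    return combined

def dead_zones(profile, threshold):
    """UTC hours whose combined activity is below the (hypothetical) threshold."""
    return [hour for hour, count in enumerate(profile) if count < threshold]

# Hypothetical regions, each active from 08:00 to 16:59 local time.
business_hours = [0] * 8 + [50] * 9 + [0] * 7
combined = to_utc_profile([business_hours, business_hours], [-5, -8])
print(dead_zones(combined, threshold=1))
```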

Besides sampling, we also need to determine where the samples will come from. This depends on the nature of the application or system being tested. A new system or application may have no usage history unless it is migrating from another application or system.

There are several possibilities for gathering the necessary information about the behavior of the users or devices.

- Existing systems can provide useful information.

- Similar applications can serve as a model. The activity pattern of one Web application may be close enough to another's to serve as a model. The question is whether the characteristics really are similar.

- Industry statistics are available from many industries and applications. These tend to be generic but may serve as a good base model. There are organizations that specialize in collecting and selling this type of information.

- Marketing and management projections provide information, but use them with care: they are projections, not facts, and they may be overly optimistic.

Once we have our samples, the next step is to analyze their content. From it we determine the number of users or events, the various behavior patterns, and the proportion of active and passive users or events. The goal of a performance test is to simulate the real world (create chaos). To do this, we need accurate samples of the level of activity during the specified time period.

The samples should be used to estimate the load size (level of activity). They should also be used to build a profile of the events or users that make up the load. Each sample will contain a mix of activity generators, including:

- Users or customers

- Devices

- Systems

- Applications

- Sensors

- Etc.

One of the key questions will be how many different types of users or events do we have? There may be a variety of activities performed by each type of user or event. Therefore, we must have a fairly accurate picture of both the number and types of each user or event. Many types may do the same activities but they have variations, such as the frequency of activities and the distribution of activities based on delays (user think times, latency, etc.).
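After the mix of user or event types has been estimated from the samples, it must be turned into whole numbers of virtual users for the load tool. The sketch below does this with largest-remainder rounding, so the counts always add up to the target total. The type names and percentages are hypothetical, and the rounding rule is an illustrative choice.

```python
def allocate_virtual_users(total_users, mix):
    """Split total_users across user types according to mix (fractions summing to 1).

    Uses largest-remainder rounding so the counts always sum to total_users.
    """
    raw = {kind: total_users * share for kind, share in mix.items()}
    counts = {kind: int(value) for kind, value in raw.items()}
    leftover = total_users - sum(counts.values())
    # Give the remaining users to the types with the largest fractional parts.
    for kind in sorted(raw, key=lambda k: raw[k] - counts[k], reverse=True)[:leftover]:
        counts[kind] += 1
    return counts

# Hypothetical operational profile: 60% browsers, 30% buyers, 10% administrators.
print(allocate_virtual_users(100, {"browser": 0.6, "buyer": 0.3, "admin": 0.1}))
```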

Several different sources of activity may have to coexist on a system or application. For example, the mix of activities on a network (HTTP, FTP, VoIP, SMTP, etc.) may come from multiple sources. The time it takes a client or event to respond to an application can significantly affect the number of clients or events the application can support. These factors depend on the type of application or system being tested and may include:

- Think times (human users)

- Latency and jitter (networks)

- Seek times (data storage devices)

- Processing time (applications)

  - Driven by CPU, memory, etc.

Other factors may also affect the activity patterns on a system or application. Newer systems and applications may have to handle a large number of "pervasive and persistent" devices, such as BlackBerries. These devices are always on, consuming resources such as session IDs, but may be passive in terms of actual activity.

These factors need to be accounted for in assessing and understanding the concurrency requirements for the application or system. Regardless of how many physical devices are attached to a system or application, they're not all doing something at the same time.

In any particular period, we can have several possible types of distribution of users. All sessions can occur in the same time period, leaving the remainder of the time idle, as shown in figure 3, example 1. All activities or sessions can be evenly distributed over the time period, as shown in figure 3, example 2.

Figure 3

Neither of these models is likely in practice, because most applications and systems do not operate in such idealized patterns. In reality, activities will be randomly distributed over any time period, as shown in figure 4. Some users or devices will be active and some will be idle. There will be overlapping sessions, which start in one time segment and end in another. The idle gaps within a session are referred to as think time for human users and delay time for non-human-driven devices.

Figure 4: Example 3, random distribution (chaos)
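The random distribution in figure 4 can be explored with a small simulation. The sketch assumes Poisson session arrivals (exponentially distributed gaps between arrivals) and exponentially distributed session lengths. Both are common modelling assumptions, not measurements from the article. It reports the time-averaged and peak number of concurrent sessions in the window. The simulated system starts empty, so the average is lower than the steady-state level.

```python
import random

def simulate_concurrency(sessions_per_hour, mean_session_s, hours=1, seed=42):
    """Return (average, peak) concurrent sessions for randomly arriving sessions.

    Assumes Poisson arrivals and exponential session lengths (modelling choices).
    """
    rng = random.Random(seed)
    arrival_rate = sessions_per_hour / 3600      # sessions per second
    horizon = hours * 3600                       # observation window in seconds
    events, busy_time = [], 0.0
    t = rng.expovariate(arrival_rate)
    while t < horizon:
        end = t + rng.expovariate(1 / mean_session_s)
        events += [(t, 1), (end, -1)]            # +1 at session start, -1 at session end
        busy_time += min(end, horizon) - t       # count only time inside the window
        t += rng.expovariate(arrival_rate)
    current = peak = 0
    for _, delta in sorted(events):
        current += delta
        peak = max(peak, current)
    return busy_time / horizon, peak

# Hypothetical: 400 sessions per hour, fifteen-minute average sessions.
print(simulate_concurrency(400, 15 * 60))
```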

Now that we have the load (volume) and the activities that constitute the load, we need to define the patterns to be used by our load generation tool. As noted earlier, users do not work in fixed, steady patterns and sequences; each sequence of events that constitute a session needs to be analyzed and a set of variations created.

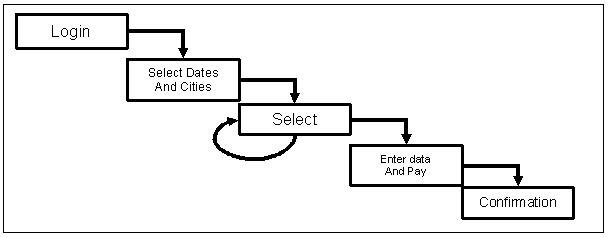

Let's look at a simple sequence. Figure 5 shows a simplified diagram of a user purchasing an airline ticket using an application.

Figure 5

The purchase sequence could have several patterns, including:

- Login, dates, itineraries, data & payment, conf

- Dates, itineraries, login, data & payment, conf

- Dates, itineraries, create account, data & payment, conf

- Login, dates, itineraries, data, deferred payment, conf

Each pattern will have variations due to natural delays, think times for humans, clock delays for devices, etc. When implementing these patterns in our tool, we will need to create several scripts with variable think times, for example:

- <3>Login, <10>dates, <14>itineraries, <10>data & payment, <6>conf

- Login, <12>dates, <20>itineraries, <8>data & payment, <6>conf

- <10>Login, <3>dates, <9>itineraries, <14>data & payment, <6>conf

Here, <n> represents a delay of n seconds.
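Variations like these can also be generated rather than written by hand. The sketch takes the first pattern above as a base and scales each think time by a random factor of +/- 50 percent. The jitter range, the uniform distribution, and the data structure are illustrative assumptions. Real load tools usually have their own think-time settings.

```python
import random

# Steps and base think times (seconds) taken from the first example pattern above.
BASE_SCRIPT = [("login", 3), ("dates", 10), ("itineraries", 14),
               ("data & payment", 10), ("conf", 6)]

def script_variants(base, count, jitter=0.5, seed=1):
    """Create count variants of a script, scaling each think time by a random
    factor in [1 - jitter, 1 + jitter] (an illustrative choice)."""
    rng = random.Random(seed)
    return [
        [(step, round(delay * rng.uniform(1 - jitter, 1 + jitter), 1)) for step, delay in base]
        for _ in range(count)
    ]

for variant in script_variants(BASE_SCRIPT, 3):
    print(", ".join(f"<{delay}>{step}" for step, delay in variant))
```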

When generating the test scripts and test data, it will be necessary to ensure the scripts are repeatable. If a script adds a record to the database and uses a unique key, then the script will have to be modified to use a data source of different keys for each virtual client script. Otherwise, all scripts after the first one will error out and you will not get an accurate picture of system resource usage.

This will also require that the database contain enough records that the scripts can access multiple records distributed across the data space. This helps to avoid creating artificial improvements in database performance by having all scripts access and update the same record set.
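Both requirements can be sketched in a few lines. The pool below gives each virtual user its own unique key and fails loudly when the keys run out. The second function picks existing record IDs spread across the whole table instead of one hot subset. `KeyPool`, `spread_record_ids`, and the `BOOKING-` key format are hypothetical. Most load tools offer their own data-pool or parameterization feature for this purpose.

```python
import random
import threading

class KeyPool:
    """Hands out unique keys so no two virtual users insert the same record (hypothetical helper)."""

    def __init__(self, keys):
        self._keys = iter(keys)
        self._lock = threading.Lock()  # virtual users may run on separate threads

    def next_key(self):
        with self._lock:
            try:
                return next(self._keys)
            except StopIteration:
                # Running dry mid-test would cause duplicate-key errors, so fail loudly.
                raise RuntimeError("test data exhausted: generate more keys") from None

def spread_record_ids(total_records, count, seed=7):
    """Pick existing record IDs spread across the whole table, not one hot subset."""
    return random.Random(seed).sample(range(1, total_records + 1), count)

pool = KeyPool(f"BOOKING-{n:06d}" for n in range(1000))
print(pool.next_key(), pool.next_key())
print(spread_record_ids(total_records=100_000, count=5))
```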

The first step is identifying the overall data requirements. There can be multiple data sources that have to be coordinated when running a test on a complex system or application. Data used in the scripts may have to be coordinated with all these data sources. The possible data requirements may include:

- Creating data for customizing the scripts

- Local database loading

- Web database files

- Legacy database files

- Back office data impacts

The next step is identifying the sources of data. Where will the data come from, and will there be enough volume and variety to meet our test requirements? During the execution phase of testing, as changes are made to various aspects of the system, we may have to update these data elements. There are several ways to acquire the necessary data. Some sources, such as production systems, may be unavailable because they do not exist yet or because access to them is restricted (for legal reasons, etc.). Possible data sources include:

- Production systems

- Data generation or creation tools

- Manual creation of data

Caution must be exercised as the tests may affect support and back office applications and databases, as well.

Any changes to the system or application that result from tuning and fixing may require a regression test to be re-run. In that case, the affected scripts and data will have to be regenerated, or deleted and recreated.

The creation of scripts and the data necessary to make them flexible will be affected by the number and types of tests we want to run and how our tool allows us to execute various test types. Many tools will allow a test to start out with a small number of "virtual users" and then scale the number of users by a defined amount until a specified level has been obtained. If this is one of the tests to be executed, the initial scripts used by the first set of virtual users will get repeated many more times than the scripts used by later virtual users. Therefore, enough "data" records have to be created so that the initial scripts do not begin to error out as the longer, more complex tests are executed.
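The effect described above can be estimated before the test runs. The sketch builds a stepped ramp-up schedule and counts how many records it would consume. It assumes that every virtual user uses one fresh record per iteration and repeats the script every `iteration_s` seconds until the test ends. The function names and parameters are hypothetical and do not belong to any tool's configuration.

```python
def ramp_schedule(start_users, step_users, step_interval_s, max_users):
    """Yield (start_time_s, users_started) batches for a stepped ramp-up."""
    if step_users <= 0:
        raise ValueError("step_users must be positive")
    t, active, batch = 0, 0, start_users
    while active < max_users:
        batch = min(batch, max_users - active)
        yield t, batch
        active += batch
        t += step_interval_s
        batch = step_users

def records_needed(schedule, duration_s, iteration_s):
    """Records consumed if each user takes one fresh record per iteration (an assumption)."""
    return sum(users * ((duration_s - t) // iteration_s)
               for t, users in schedule if t < duration_s)

# Hypothetical: start 10 users, add 10 every 5 minutes up to 50, run 30 minutes,
# one iteration per user per minute.
print(records_needed(ramp_schedule(10, 10, 300, 50), duration_s=1800, iteration_s=60))
```

In this example, each user in the first batch consumes 30 records and each user in the last batch consumes 10. This is why the data for the earliest scripts runs out first.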

Some tools support script synchronization so that a large number of users do not all start at once. Other tools allow dynamic adjustments to the number of running users. Whatever capabilities the tool has must be managed in light of the script types and data requirements needed to maintain the integrity of the load profile and the mix of activities. How the necessary scripts and data are created and made reusable will depend on the type of workload profile and the number of users to be simulated.

Finally, any additional activity must be considered to reflect accurately the performance degradation caused by network and other application loads. This is often referred to as "background noise" or "white noise." Production servers are not always dedicated to a single application. The Web server may be dedicated while the database server is shared with several client/server applications. Unless every production server is dedicated, we may have to load the servers in the system test environment with appropriate background tasks, to stand in for the other work they will support in production. Elements to consider include other applications that will run on the production servers once the application under test goes live, and other network traffic that will consume network bandwidth.

If these other applications and events exist in the production environment and they are not included in the test, the validity of the test is further reduced.

Quantifying and Defining Measurement

Now that we understand the problems related to "load," let's look at the concept of measurement. In the previous parts of this series, we examined the issues that need to be addressed so we can focus our efforts correctly and so that we know where to look for problems.

There are many things we can measure and each measurement provides critical information about potential problems during test execution. What we measure will be based on several factors:

- The problems we expect to encounter

- The potential bottlenecks

- The types of loads we are running

- The general goals of the test (as defined earlier)

The measurements will help determine the number and types of monitoring tools we will require (tools will be addressed in part four). Below is a list of common measures and why each may be important. This is not a complete list, but it covers many of the common problem areas.

- Unique sessions: This measure tells the tester how many sessions were actually initiated on the server. Session IDs can be generated by the application or assigned by the server or system. As part of the operational load profile definition, the tester determines how many sessions are desired per time period.

- Transactions per second: This measure can represent many different things, depending on how the term is used and defined.

  - From a business perspective, a transaction may comprise multiple pages or screens. Load tools may call this a round, agenda, or sequence.

  - From a functional perspective, a tester may define a transaction as a single screen, page, or event.

  - In the case of tools, the transaction may represent a single HTTP request.

  - It is important to make sure all of these definitions agree, or the test results may be misleading.

- MB per second: This is typically used to assess the capacity or capability of a network or connection. It is usually collected by the network administrator while the test is running. The desired volume is determined by the number of users created and the level of activity each user or event generates.

- Activity on the database: This is frequently collected by the DBA. It is usually not a specified level or target. Instead, it is collected to determine whether the database is a potential bottleneck during the load test. Databases tend to have problems with indexes, data distribution, access conflicts, etc.

- Memory and CPU usage: Limits for these are usually defined by the manufacturer of the infrastructure. We collect these measures using the monitors provided by the various system or infrastructure vendors. Many of these monitors can be accessed by the load generation tool. The information is pulled back into the tool and combined with the load and other information for analysis and reporting.

- Number of concurrent users and number of sessions per hour: These measures are defined as part of the operational load profile created by the tester. The tester can calculate them from the number of virtual users created. Most load generation tools provide details about what was generated and how.
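To see how much the definition of "transaction" matters, the sketch computes transactions per second three ways from the same hypothetical request log: per HTTP request, per page, and per business transaction. The `Request` record and its fields are assumptions about what a tool's log might contain. The page count also assumes that each page is visited once per business transaction.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Request:
    time_s: float
    business_txn: str  # hypothetical ID of one end-to-end purchase
    page: str          # screen or page the request belongs to

def throughput(requests, window_s):
    """Report 'transactions per second' under three different definitions."""
    return {
        # Tool view: every HTTP request counts.
        "http_requests_per_s": len(requests) / window_s,
        # Functional view: each distinct page within a business transaction counts once.
        "pages_per_s": len({(r.business_txn, r.page) for r in requests}) / window_s,
        # Business view: each end-to-end transaction counts once.
        "business_txns_per_s": len({r.business_txn for r in requests}) / window_s,
    }

# Two purchases, each spanning two pages that issue three HTTP requests apiece.
log = [
    Request(time_s=float(n), business_txn=txn, page=page)
    for txn in ("txn-1", "txn-2")
    for page in ("dates", "payment")
    for n in range(3)
]
print(throughput(log, window_s=10))
```

The same ten-second run reports 1.2, 0.4, or 0.2 "transactions per second", depending on which definition is used.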

All these measures are gathered from the various sources by the tester and mapped together into a report that can be used to determine where possible bottlenecks exist. This aggregated information can be used by the technical staff to diagnose problems and make corrections.

In short, where the measures are generated, who defines them, and how they are collected depend largely on the measures themselves and their intent.

Every measure will require some form of monitoring tool to gather the data. Monitors will utilize some of the server's resources and will therefore degrade the performance of the server. While some degradation cannot be helped, it is important to figure out how much degradation is attributable to the monitor. This has to be factored into the predictions for the server's performance once the monitoring software is turned off or removed. Some monitors, such as network monitors, may not be turned off in production as they are a part of normal operations.
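One way to estimate monitor overhead is to run the same load twice, once with the monitors off and once with them on, and compare throughput. Later measurements can then be adjusted by the measured overhead. This calibration procedure and the linear scaling are assumptions made for illustration. The adjustment should not be applied to monitors that will stay on in production.

```python
def monitor_overhead(throughput_unmonitored, throughput_monitored):
    """Fraction of throughput lost to monitoring, from two otherwise identical
    runs (an assumed calibration procedure)."""
    return (throughput_unmonitored - throughput_monitored) / throughput_unmonitored

def projected_throughput(measured, overhead):
    """Scale a monitored measurement to estimate throughput once monitors are removed
    (assumes the overhead is a constant fraction)."""
    return measured / (1 - overhead)

overhead = monitor_overhead(200.0, 190.0)   # hypothetical calibration runs, transactions per second
print(f"monitor overhead: {overhead:.1%}")
print(f"projected without monitors: {projected_throughput(185.0, overhead):.1f} tps")
```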

Whether these targets are defined by an external source or by an organization's internal procedures, they need to be defined as early as possible. Every measurement should have a maximum acceptable limit for sustained usage. Once usage reaches this level, the resource is under constant stress. The limit may be exceeded for short periods, but usage should not stay at or above it, or the system may begin thrashing. Many vendors specify such limits. For example:

- Max sustained memory usage = 75%-80%

- Max sustained CPU usage = 70%-75%

- These ceilings are typically designed to keep the system from thrashing
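To check a run against such ceilings, we can look for sustained breaches rather than momentary spikes. The sketch flags runs of consecutive samples at or above a limit that last longer than an allowed number of samples. The sample data, the sampling interval, and the three-sample tolerance are hypothetical. The 70 percent CPU limit is taken from the range above.

```python
def sustained_breaches(samples, limit, max_consecutive):
    """Return (start_index, length) for runs of samples at or above limit that
    last longer than max_consecutive samples."""
    runs, start = [], None
    for i, value in enumerate(list(samples) + [float("-inf")]):  # sentinel closes a trailing run
        if value >= limit:
            if start is None:
                start = i
        elif start is not None:
            if i - start > max_consecutive:
                runs.append((start, i - start))
            start = None
    return runs

# Hypothetical CPU utilization (%), one sample per minute.
cpu = [50, 80, 85, 90, 60, 72, 74, 71, 73, 40]
print(sustained_breaches(cpu, limit=70, max_consecutive=3))
```

The short spike at samples 1 to 3 is tolerated. The four-sample run starting at index 5 is reported as sustained.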

Response times are probably the most difficult measure to define when dealing with end-to-end events or transactions as perceived by a user or client. Response time for devices may be easier to define as they deal with mechanical or electronic elements.

Not all events or transactions require the same response time. The analysis performed in creating the operational profile should help us determine both critical and non-critical events or transactions. We may even decide to define different categories of response times such as general response times for groups of events or transactions and specific event or transaction response times.

There are common attributes of good response time definitions:

- Quantitative: Times should be expressed in terms that allow a rational comparison.

- Measurable: Times can be measured at reasonable cost, using a tool or a stopwatch.

- Relevant: Times are appropriate to the duration of business processes. Market orders for a stock are far more time sensitive than a query on a current account balance.

- Realistic: Sub-second response times for everything are neither possible nor realistic. Some system functions, such as large background queries, might take minutes to complete.

- Achievable: There is little point in agreeing to response times that clearly cannot be achieved at a reasonable cost.

Ideally, response times would be specified in increments and would also be relative to a level of activity (load). For example:

Under normal (average) load conditions (as defined in the operational profile) the following page download times can be expected:

- Average <5.0 seconds

- 90 percent <10.0 seconds (90 percent of the time we will be below this)

- 95 percent <12.5 seconds (95 percent of the time we will be below this)
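Checking measured page download times against targets like these takes only a few lines. The sketch uses the nearest-rank definition of a percentile. Load tools differ in how they compute percentiles, so this is one choice among several. The sample times are hypothetical. The targets are the example values above.

```python
import math
from statistics import mean

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def check_response_times(times_s, targets):
    """Return {measure: (value, meets_target)} for the average, 90th, and 95th percentiles."""
    measured = {
        "average": mean(times_s),
        "p90": percentile(times_s, 90),
        "p95": percentile(times_s, 95),
    }
    return {name: (value, value < targets[name]) for name, value in measured.items()}

targets = {"average": 5.0, "p90": 10.0, "p95": 12.5}
times = [2.0] * 18 + [13.0, 14.0]   # hypothetical page download times in seconds
print(check_response_times(times, targets))
```

Here the average and the 90th percentile meet their targets, but the 95th percentile (13.0 seconds) does not.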

If the type of test is a sustained usage profile, a fixed number of users or events per time period, then we would not expect to see much variation above the average response time. If we are testing using profiles such as a peak hour or a load variation or bounce test we can expect to hit the 90 to 95 percent ceilings on a regular basis and possibly exceed them frequently.

I find it is not a good idea to go beyond the ninety-fifth percentile when defining response times. The higher the percentile, the more likely the system is to exceed the specified maximum, especially during high-volume, bounce-type load tests. This could make the system appear unacceptable when in fact we simply promised too much.

Promising 100 percent of any level of performance is always a bad idea. Given the nature of systems and applications, such a guarantee is an almost certain recipe for failure.

Other elements may also need response time requirements. The elements that make up a screen or page can affect whether an application or system is acceptable. The end-to-end times may be fine while the user is still dissatisfied. For example:

- Less than 0.1 seconds

  - Button clicks

  - Client-side drop downs

- Less than one second

  - Java applet execution started

  - Screen navigation started

- Less than ten seconds

  - Screen navigation completed

  - Search results presented

- Less than one minute

  - Java applet download completed

In his books Usability Engineering and Designing Web Usability, Jakob Nielsen states, "the basic advice regarding response times has been about the same for almost thirty years."

Understanding the characteristics of load and defining it properly takes a lot of time and effort. If our test is to be accurate and our measurements meaningful, we need to focus on this critical part of the performance test design. Measurements are complex, difficult to define, and only as good as the accuracy of the load profiles we use.

Once these activities are complete, we will be ready to look at tools and proceed towards execution of the tests and reporting the results, which will be addressed in the final part of the series.

Read "Understanding Software Performance Testing" Part 1 of 4.

Read "Understanding Software Performance Testing" Part 2 of 4.

Read "Understanding Software Performance Testing" Part 3 of 4.

Read "Understanding Software Performance Testing" Part 4 of 4.