When it comes to load testing in the cloud, going bigger is not always better. This article describes how organizations can use load testing to right-size a production system being built in the cloud, so that project teams end up with a system that is neither too small to perform well nor too large and costly.

When application development teams build an application on physical hardware, initial hardware costs are generally a one-time consideration. They size their systems to support periods of peak load and some predetermined amount of buffer.

Physical systems are usually built oversized in relation to expected peak loads to mitigate the risk that a bad sizing calculation or false assumption has been made. It is more favorable to have a system that is somewhat oversized than one that is somewhat undersized because expected growth should be taken into account. We don’t build infrastructure just to support today’s loads; we make an educated guess as to how much our user base will grow in one, two, three years and beyond, and we build a system to support that growth.

In technology, processing capacity correlates positively with cost: the greater the processing capacity of the hardware in question, the higher the price. We make an initial outlay for our servers, network equipment, and other hardware; we build out our environment; install, test, and configure our software; and deploy the finished product to production. With physical hardware, once we have made the initial purchase and deployed our system to the target environment, the ongoing cost for the infrastructure is comparatively low.

External cloud implementations offer an inverse cost structure compared to physical implementations. In the cloud, initial hardware investment is minimal, while ongoing (generally monthly) costs accrue based on the size and capability of the machine types utilized. The more capacity you purchase from the cloud provider, the higher the monthly cost. Whether or not you utilize that capacity, you are still paying for its availability. This availability cost complicates sizing and performance decisions. Properly targeted load testing allows a company to right-size its cloud installations, balancing ongoing costs with performance requirements and guiding decisions that will have a direct impact on the cost of a system’s cloud footprint.

Let’s look at a basic example to illustrate two different approaches to building an application in the cloud.

The first approach is to build the system on the largest cloud instances available without regard to ongoing cost. This is a tempting solution, particularly when an organization is under pressure to deliver a project in a compressed timeframe, which is usually the case with application development projects. Teams under extreme pressure from their IT leaders to deliver something of value to production look for ways to reduce delivery times without adding risk to their projects. Machine instance sizes are selected not through thoughtful consideration of sizing needs, but because the developers and architects are certain that performance will not be a problem on larger instances. Because the initial cost of spinning up a large machine instance in the cloud is either the same as or only marginally higher than that of spinning up a comparatively smaller instance, the project team implements an oversized infrastructure in the cloud for very little initial cost. Whereas on physical hardware the cost difference between a small server and a large server can amount to tens of thousands of dollars up front, the cost to spin up a large cloud instance can be almost nothing. So, larger instances are utilized, load testing is either skipped or cut back to save delivery time, and the application performs acceptably on the chosen infrastructure. This approach, however, causes a number of issues soon after the application is deployed to production.

First, this “build it big” method of developing for the cloud encourages teams to build flabby solutions without consideration for performance or load handling. Why bother tuning an application for performance when a developer can simply choose the largest available machine type and have it run acceptably? This code, however, will invariably be inefficient, creating a “lock-in” effect—the code now must reside on larger, more expensive instances because it can’t run efficiently on more moderately sized instances. The only way now to reduce the cost of the underlying cloud infrastructure is to go back and refactor the code for performance, another project (and an additional set of costs) in and of itself.

Second, this method of developing for the cloud backloads the cost of a project and obscures the true cost of the application until it is too late to easily do anything about it. Because initial costs to deploy the application into the cloud are low, the project may initially appear to be a success. Only after the first bill from the cloud provider comes due does the business realize what has occurred. By this point, the application is operating in production, and any attempt to downsize the infrastructure becomes another project of its own. Unless the organization has a very mature DevOps team that can seamlessly move application servers and databases from one cloud machine to another, the organization will soon find that downsizing servers in the cloud can be as difficult as migrating from one physical machine to another in a data center—a time-consuming and expensive process, indeed.

In addition, this method of developing for the cloud does not guarantee the application can scale appropriately in the future. A team supporting an application that already resides on very large instances may find that the only way to scale up is to add additional and expensive large instances, compounding the costs of the infrastructure very quickly.

The second approach to building in the cloud is one in which due consideration is given to the load-handling and performance requirements of the system to be built. This approach does involve some investment of time to define performance requirements and to perform nonfunctional testing, but the resulting system will be much more efficient with resources, more scalable, and less expensive to maintain.

Building a cloud-based application using the largest available machine types may meet the application’s performance requirements initially, but it will likely result in a waste of resources because the infrastructure has much more computing power than it actually needs to perform effectively. This capacity goes unused, but the business must still pay for the capacity purchased, not the capacity utilized. Understanding the capacity needs of the application up front will guide the decision of which machine sizes to utilize and save the company money from the beginning of the project through its entire useful lifetime.

Let’s take a look at how a team can use load testing to match performance needs with cloud capacity to select the right cloud servers from the start.

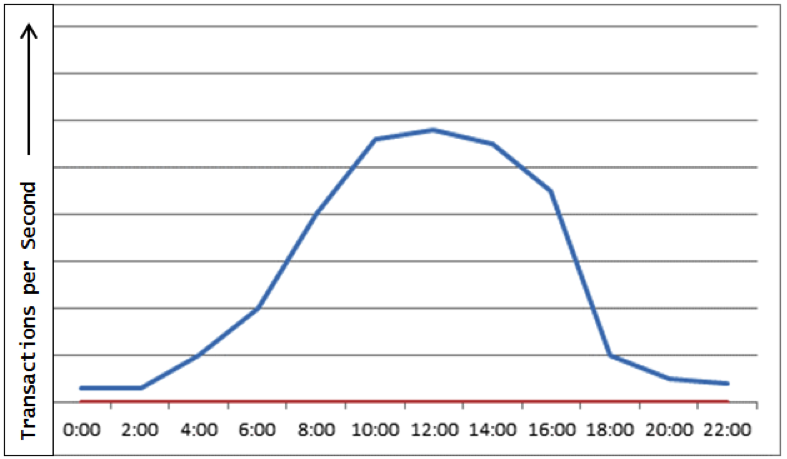

We start by understanding our system’s likely load profile, or how much work (users, data, files, or however your system defines “work”) it will handle over a period of time. Figure 1 represents a theoretical load profile across a twenty-four-hour period for a sample system. Like most, our example system experiences a low period and a peak period each day.

Figure 1: This sample load model for our example system shows high and low processing periods across twenty-four hours. These periods can be derived from existing data on similar systems or can be estimated based on the expectations of the business.
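A load profile like the one in figure 1 can be reduced to a few numbers that drive sizing. The following is a minimal sketch, assuming the profile is available as twenty-four hourly values in transactions per second; the function name, the `buffer_fraction` parameter, and the sample values are hypothetical and are not taken from the figure.

```python
from typing import Sequence


def summarize_load_profile(hourly_tps: Sequence[float],
                           buffer_fraction: float = 0.25) -> dict:
    """Summarize a 24-hour load profile (one value per hour, in TPS).

    buffer_fraction is an assumed safety margin applied on top of the
    observed peak; the article does not prescribe a specific value.
    """
    if len(hourly_tps) != 24:
        raise ValueError("expected one value per hour (24 values)")
    peak = max(hourly_tps)
    low = min(hourly_tps)
    return {
        "peak_tps": peak,
        "peak_hour": list(hourly_tps).index(peak),  # first hour at peak
        "low_tps": low,
        "low_hour": list(hourly_tps).index(low),    # first hour at the low
        "target_tps": peak * (1 + buffer_fraction),  # peak plus buffer
    }


# Hypothetical profile: quiet overnight, busy during the working day.
example_profile = [10] * 7 + [40, 70, 90, 100, 95, 80, 85, 90, 75, 50] + [20] * 7
summary = summarize_load_profile(example_profile)
```

The `target_tps` value is the load level the chosen machine type would need to sustain; how much buffer to add remains a business decision.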

We generate a repeatable set of load test scenarios and run these tests multiple times using the same test cases and the same test data. These repeatable tests allow us to apply an increasing level of load on the application as we monitor the response time and system resources, such as CPU, memory, and network utilization, as well as disk input and output.

At any load level, the system must pass all of the following criteria:

- Transaction response time remains below 500 milliseconds

- CPU utilization on every machine remains below 60 percent

- Memory utilization on every machine remains below 70 percent

- No swapping to disk occurs

- Disk latency remains below 100 milliseconds on all drives

As soon as any one criterion fails, the test is stopped and the throughput (in transactions per second) at that point is recorded. As we move to larger and larger machine types, we expect this throughput to increase.
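The pass criteria and the stop-on-first-failure rule can be sketched in a few lines. This is an illustration only: the `Sample` structure and function names are hypothetical, and the samples are assumed to come from whatever load tool and monitoring a team already uses, ordered by increasing load. The sketch reports the last throughput at which every criterion still passed, a slightly conservative reading of the rule; a team could equally record the throughput of the failing sample.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class Sample:
    """One measurement taken at a given load level (hypothetical shape)."""
    throughput_tps: float
    response_time_ms: float
    max_cpu_pct: float          # highest CPU utilization across machines
    max_memory_pct: float       # highest memory utilization across machines
    swapping: bool              # True if any machine swapped to disk
    max_disk_latency_ms: float  # highest latency across all drives


def failed_criteria(s: Sample) -> List[str]:
    """Return the names of the criteria this sample violates."""
    failures = []
    if s.response_time_ms >= 500:
        failures.append("response time")
    if s.max_cpu_pct >= 60:
        failures.append("CPU")
    if s.max_memory_pct >= 70:
        failures.append("memory")
    if s.swapping:
        failures.append("swapping")
    if s.max_disk_latency_ms >= 100:
        failures.append("disk latency")
    return failures


def max_passing_throughput(samples: Iterable[Sample]) -> Optional[float]:
    """Walk samples in order of increasing load and stop at the first failure.

    Returns the throughput of the last fully passing sample, or None if
    even the first sample fails.
    """
    best = None
    for s in samples:
        if failed_criteria(s):
            break
        best = s.throughput_tps
    return best
```

Running the same function against results from each machine type gives one comparable throughput figure per type.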

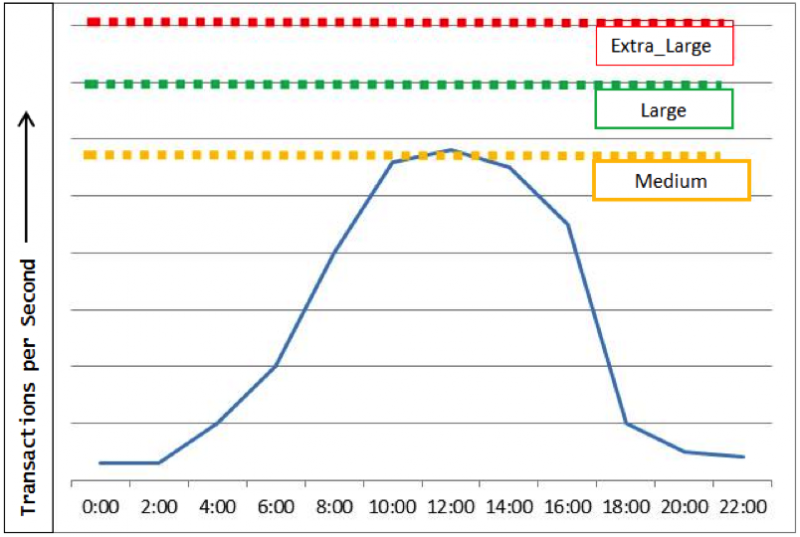

By load testing our system on three different cloud machine types, we measure and graph the system’s throughput in figure 2. The red, green, and yellow lines represent the measured throughputs of our system installed on fictional machine types “Extra_Large,” “Large,” and “Medium,” respectively. We overlay the throughput lines over our expected daily load profile to get the combined graph shown.

Figure 2: Three different peak load-handling capabilities using medium, large, and extra-large cloud instances, as measured through load testing, are shown. Picking the correct instance size will have a major impact on the monthly cost of the cloud infrastructure, as well as the system’s ability to handle its workload.

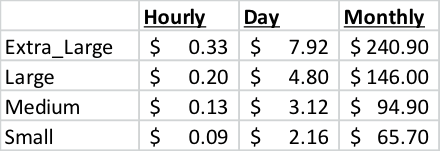

Table 1: Theoretical monthly pricing for cloud instance sizes

Once you have this combined graph in place, knowing which of these three machine types to utilize depends on your performance requirements. At first glance, Large appears to be the right option. Going to a larger machine instance, such as Extra_Large, may result in better performance, but at additional cost (approximately ninety-five dollars a month more for each machine). If performance requirements can be relaxed somewhat such that slightly slower response times are acceptable, or if work can safely be queued for a short period, then moving down to Medium instances may be appropriate as long as the resource utilization (CPU, memory, etc.) is acceptable. Medium instances cost fifty-two dollars a month less per machine than Large instances. This solution would not be appropriate for transactions that need to be processed immediately, such as serving dynamic web pages or performing real-time queries. But for workloads where subsecond responses are not critical, the savings may be substantial.
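The selection logic described above can be expressed as a simple rule: among the machine types whose measured throughput covers the required load, pick the cheapest. The sketch below assumes that rule. The throughput figures and absolute prices are placeholders invented for illustration; only the price differences (Extra_Large about ninety-five dollars above Large, Medium fifty-two dollars below) follow the text.

```python
from typing import Dict, Optional, Tuple

# machine type -> (measured throughput in TPS, monthly cost in dollars)
# All values are hypothetical placeholders, not the figures from Table 1.
MACHINES: Dict[str, Tuple[float, float]] = {
    "Medium": (60.0, 48.0),
    "Large": (100.0, 100.0),
    "Extra_Large": (160.0, 195.0),
}


def cheapest_sufficient(machines: Dict[str, Tuple[float, float]],
                        required_tps: float) -> Optional[str]:
    """Return the lowest-cost machine type that meets required_tps.

    Returns None when no machine type is large enough, which signals
    that scaling out or tuning the application is needed instead.
    """
    candidates = [
        (cost, name)
        for name, (tps, cost) in machines.items()
        if tps >= required_tps
    ]
    return min(candidates)[1] if candidates else None


def cost_per_tps(machines: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Monthly dollars paid per unit of measured throughput."""
    return {name: cost / tps for name, (tps, cost) in machines.items()}
```

Relaxing the requirement, for example by allowing work to queue during the peak, lowers `required_tps` and may let a smaller machine type qualify.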

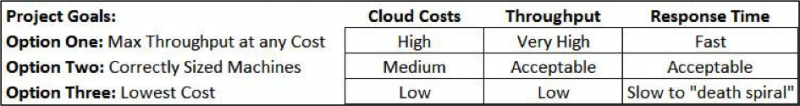

Table 2: Project goals will drive the cost, throughput, and response times of the cloud system. Achieving a balance among the three will contribute to the project’s success and long-term viability.

The key idea here is to select neither the smallest machine types possible nor the largest, but the size (and cost) of machine that delivers the required performance.

While we usually live in the domain of traditional performance indicators such as CPU utilization, throughput, and scalability, performance testers can add significant value to their cloud projects by adding cost as another factor in their test results. This allows project managers and the business to understand the cost-versus-performance comparison of different machine types, allowing teams to make more informed decisions that will impact the ongoing cost of the application once it is in production.
