Many of us keep asking: If the benefits of automated testing are so vast, why does test automation fail so often? Artem Nahornyy addresses this common dilemma.

The rapidly changing IT industry brings new objectives and new perspectives for automated testing in areas that emerged over the past decade, such as cloud-based and SaaS applications and e-commerce. The past five years saw immense growth in the number of agile and Scrum projects. The IT market has also changed significantly, not only in its tools (e.g., Selenium 2, Watir WebDriver, BrowserMob, and Robot Framework) but also in its approaches. For example, more focus has been placed on cloud-based test automation solutions for both performance testing and functional testing. Cloud-based testing of web applications is now replacing "classic," local deployments of testing tools.

Even though automated testing has a vast number of benefits, test automation often fails. Common mistakes include selecting the wrong automation tool, using the tool incorrectly, or starting test creation at the wrong time. It is also worth paying special attention to the test automation framework and to a proper division of work between the test automation and manual testing teams. The "Test Cases Selection" section of this article highlights many reasons why we must not automate certain test cases.

Let’s take a closer look at the five most common mistakes of test automation in agile and their possible solutions.

1. Wrong Tool Selection

Even though a popular tool may offer a commendably rich feature set at an affordable price, it could have hidden problems that are not obvious at first glance, such as insufficient product support or a lack of reliability. This occurs in both commercial and open source tools.

Solution

When selecting a commercial test automation tool for a specific project, it is not enough to consider only the tool's features and price; it's best to analyze feedback and recommendations from people who have successfully used the tool on real projects. When selecting an open source tool, the first thing to consider is community support, because these tools are supported only by their community and not by a vendor. The chances of resolving issues that arise with the tool are much higher if the community is strong. Looking at the number of posts in forums and blogs across the web is a good way to assess the actual size of the community. Good places to look include stackoverflow.com, answers.launchpad.net, www.qaautomation.net, and many other test automation forums and blogs.

In order to understand whether a test automation tool was selected properly, you should begin with answering a few questions:

- Is your tool compatible with the application environment, technologies, and interfaces?

- What is the cost of your chosen test automation tool?

- How easy is it to write, execute, and maintain test scripts?

- Is it possible to extend the tool with additional components?

- How fast can a person learn the scripting language used by the tool?

- Is your vendor ready to resolve tool-related issues? Is the community support strong enough?

- How reliable is your test automation tool?

Answering these questions will provide a clear picture of the situation and may help you to decide whether the advantages of this tool's usage outweigh the possible disadvantages.
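One way to make the answers comparable across candidate tools is a simple weighted score. The following is only a minimal sketch: the criterion names mirror the questions above, but the weights, the 1-to-5 rating scale, and the function names are illustrative assumptions, not part of any established method.

```python
# Illustrative weights (assumed): higher means the criterion matters more to the team.
CRITERIA_WEIGHTS = {
    "compatibility": 3,           # fits the application environment and interfaces
    "cost": 2,                    # licence and infrastructure cost (5 = cheapest)
    "script_maintainability": 3,  # ease of writing, running, and maintaining scripts
    "extensibility": 1,           # can be extended with additional components
    "learning_curve": 2,          # how quickly the scripting language can be learned
    "support": 2,                 # vendor responsiveness or community strength
    "reliability": 3,             # stability of the tool itself
}


def score_tool(ratings):
    """Return the weighted average of a tool's ratings (each rated 1 to 5)."""
    missing = set(CRITERIA_WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    total_weight = sum(CRITERIA_WEIGHTS.values())
    weighted = sum(CRITERIA_WEIGHTS[name] * ratings[name] for name in CRITERIA_WEIGHTS)
    return weighted / total_weight


def rank_tools(candidates):
    """Return candidate tool names ordered from highest to lowest score."""
    return sorted(candidates, key=lambda name: score_tool(candidates[name]), reverse=True)
```

Passing a dictionary of tool names to rating dictionaries into `rank_tools` gives an ordering to discuss with the team. The score supports the decision; it does not replace feedback from people who have used the tool on real projects.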

2. Starting at the Wrong Time

It is a common mistake to begin test automation development too early, because the benefits almost never justify the effort lost to redeveloping test automation scripts each time the application's functionality changes before the end of the iteration. This is a particularly serious issue for graphical user interface (GUI) test automation, because GUI automation scripts are much more likely to be broken by ongoing development than other types of automated tests, including unit tests, performance tests, and API tests. Unfortunately, even after finishing the design phase you may still not know all the necessary technical details of the implementation, because the selected design can be realized in a number of different ways. For GUI tests, technical details of the implementation always matter. Starting automation early may result in repeated, wasted effort on redeveloping the automated tests.

Solution

During the development phase, members of a quality assurance (QA) team should spend more time creating detailed manual test cases suitable for test automation. If the manual test cases are detailed enough, they can be automated successfully once the given feature is complete. Of course, it's not a bad idea to write automated tests earlier, but only when you are 100 percent confident that further development within the current iteration will not break your new tests.

3. Framework

Do you know what's wrong with the traditional agile workflow? It does not seem to encourage the inclusion of test automation framework development tasks, because they carry zero story points. But it's no secret that good, effective test automation requires both tools and a framework. Even if you have already spent several thousand dollars on a test automation tool, you still need your test automation engineers to develop a framework. A test automation framework should always be considered, and its development never underestimated. How does this fit into the agile process? Pretty easily, actually, and it's not as incompatible as it may seem.

Solution

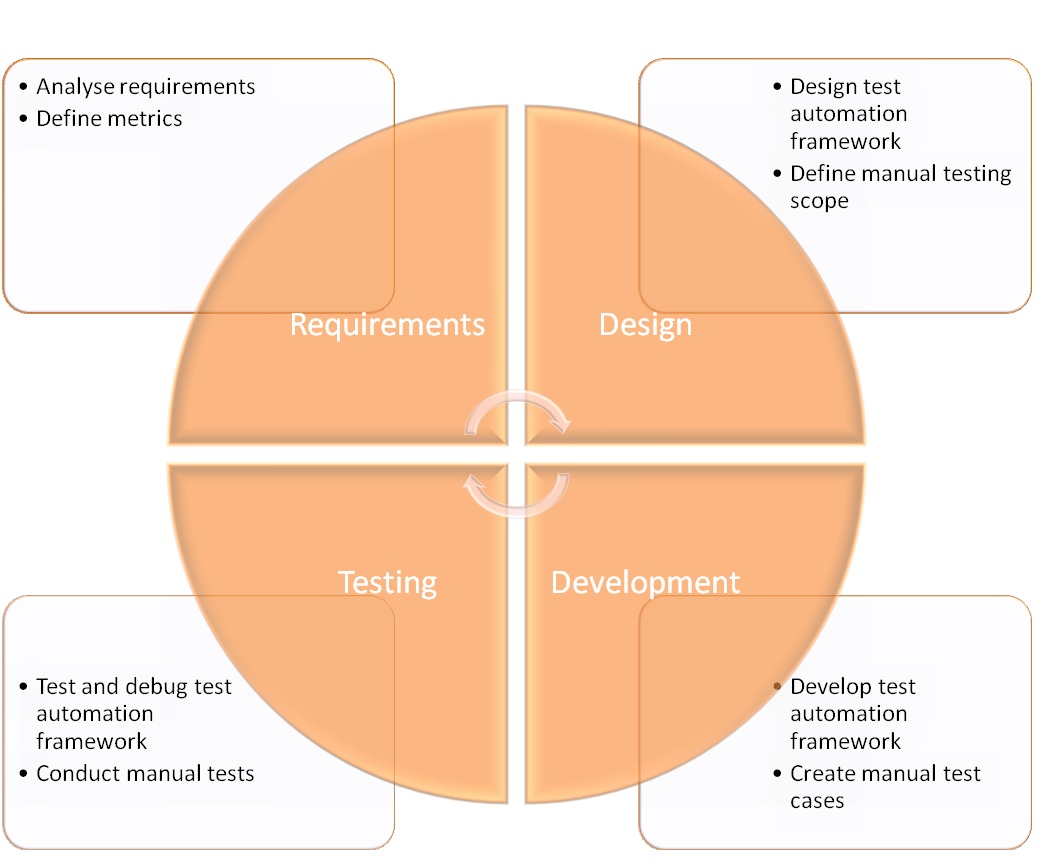

How much time would you need to develop a test automation framework? In most cases it will take no longer than two weeks, which equals the usual agile iteration. Thus, the solution is to develop the test automation framework in the very first iteration. You are probably wondering whether that means the product will remain untested, but that is not the case, because it can be tested manually during that period. The increased workload on manual testers is probably unavoidable, but not much testing is done during the first iterations, when developers are more focused on backend development that is usually covered by unit tests, so the process balances itself. The very first iteration will look like this: Start by analyzing requirements and designing the test automation framework during the design phase; then develop, debug, and test it until the end of the iteration.

Figure 1: Development of test automation framework during the first iteration, focusing on manual testing

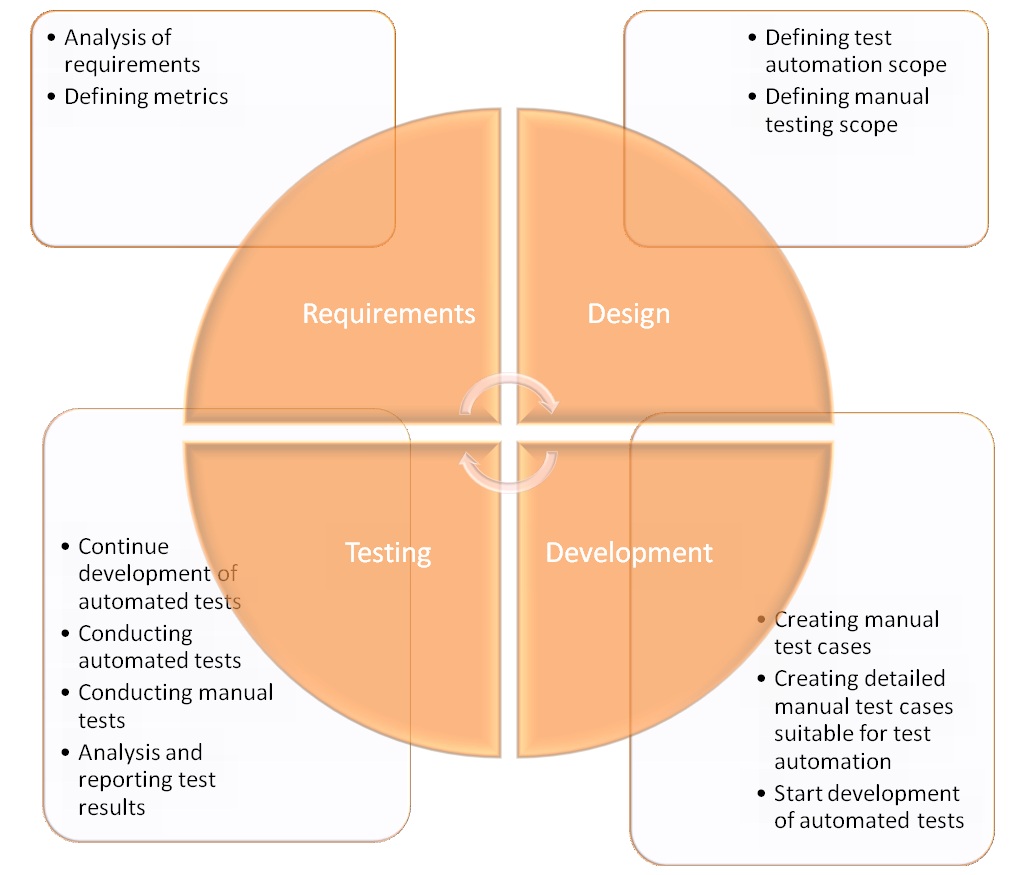

The next iteration is back to normal:

Figure 2: Test automation workflow during the next iterations

4. Test Cases Selection

How do we select test cases for automation? That's an interesting question and the source of another common mistake: trying to automate all test cases. "Automate them all" is hardly an answer if you are focused on quality and efficiency. Following this principle leads to wasted effort and money spent on test automation without bringing any real value to the product.

Solution

There are cases where it's better to automate and cases where it doesn't make much sense to do so. The cases that should not be automated always take priority. You should automate when a test case is executed frequently and takes a long time to run manually, when a test runs with different sets of data, or when a test case must run on many different platforms and system configurations.

On the other hand, test automation should not be used for usability testing, when the functionality of the application changes frequently, when the cost of test automation tools and of maintaining existing tests is too high, or when test automation doesn't provide enough advantages compared to manual testing.
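The criteria above can be sketched as a small decision helper. This is a hedged illustration only: the `CandidateCase` fields and the threshold values are assumptions chosen for the example, and the cost-versus-benefit criteria are left out because they depend on project-specific figures.

```python
from dataclasses import dataclass


@dataclass
class CandidateCase:
    """A manual test case being considered for automation (hypothetical fields)."""
    name: str
    runs_per_iteration: int
    manual_minutes: float
    data_sets: int = 1               # number of distinct input data sets
    configurations: int = 1          # number of platforms or system configurations
    is_usability_test: bool = False
    feature_is_stable: bool = True   # False while the functionality still changes often


def should_automate(case, min_runs=3, min_manual_minutes=10):
    """Apply the exclusion rules first, then the reasons to automate."""
    # Reasons not to automate take priority over everything else.
    if case.is_usability_test or not case.feature_is_stable:
        return False
    frequent_and_slow = (
        case.runs_per_iteration >= min_runs
        and case.manual_minutes >= min_manual_minutes
    )
    data_driven = case.data_sets > 1
    multi_platform = case.configurations > 1
    return frequent_and_slow or data_driven or multi_platform
```

Checking the exclusions first mirrors the rule that reasons not to automate have the higher priority. The thresholds `min_runs` and `min_manual_minutes` are placeholders that each team would tune.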

5. Test Automation vs. Manual Automation

A lack of coordination between your automated testing and manual testing subteams is another common mistake. It can lead to excessive testing effort and poor-quality software. Why does this happen so often? In most cases, manual testing teams do not have enough technical skills to review automated test cases, so they prefer to hand off this responsibility to the automated testing teams. This causes a different set of problems, including:

- The test automation scripts are not testing what they should, and in the worst case scenario, they are testing something that is not even close to the requirements.

- To ensure a successful test, test automation engineers can change test automation scripts to ignore certain verifications.

- Automated tests can become unsynchronized with the manual test cases.

- Some parts of the application under test receive double coverage, while others are not covered at all.

Solution

In order to avoid these problems, it’s best to keep your whole QA team centralized and solid. The automated testing subteam should obtain the requirements from the same place as the manual testing subteam. The same list of test cases should be kept and supported for both subteams. Automated test cases should be presented in a format that is easy to understand for non-technical staff. There are many ways to achieve this, including using human-readable scenarios, keyword-driven frameworks, or just keeping the code clean while providing sufficient comments.
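As an illustration of the keyword-driven idea, the sketch below expresses a scenario as readable rows that a manual tester can review, while a small runner maps each keyword to code. Everything in it is hypothetical: `FakeLoginPage` stands in for a real application driver, and the keyword names are invented for the example rather than taken from any specific tool.

```python
class FakeLoginPage:
    """Stand-in for the application under test; a real suite would drive a browser or API."""

    def __init__(self):
        self.fields = {}
        self.message = ""

    def enter(self, field, value):
        self.fields[field] = value

    def submit(self):
        valid = (
            self.fields.get("username") == "alice"
            and self.fields.get("password") == "secret"
        )
        self.message = "Welcome, alice" if valid else "Invalid credentials"


def expect_equal(actual, expected):
    if actual != expected:
        raise AssertionError(f"expected {expected!r}, got {actual!r}")


def run_scenario(steps, page):
    """Execute each (keyword, *arguments) row against the page."""
    keywords = {
        "enter": page.enter,
        "submit": page.submit,
        "expect message": lambda expected: expect_equal(page.message, expected),
    }
    for keyword, *args in steps:
        if keyword not in keywords:
            raise KeyError(f"unknown keyword: {keyword}")
        keywords[keyword](*args)


# The scenario reads close to the manual test case it was derived from.
LOGIN_SCENARIO = [
    ("enter", "username", "alice"),
    ("enter", "password", "secret"),
    ("submit",),
    ("expect message", "Welcome, alice"),
]
```

Calling `run_scenario(LOGIN_SCENARIO, FakeLoginPage())` runs the steps in order and raises an `AssertionError` if the expected message does not appear. Because the scenario is plain data, both subteams can keep it next to the shared list of test cases.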

Conclusion

I have listed only the most common mistakes that can undermine the efficiency of test automation and, with it, the quality of your project. It's wise to pay more attention to test automation activities and to consider them an integral part of your project's quality assurance process. If you take test automation seriously, you will be able to avoid most of the above-mentioned mistakes.

User Comments

Most Agile testing literature recommends that user stories are to be automated within the same iteration. I believe I've seen one post that actually bent to reality and suggested that automation can occur in the iteration following the user story's implementation. This article now states that some user stories should NOT be automated, or at least not automated until the application is close to its final form.

This article sounds like test automation in a waterfall shop, where testers are at the bottom of the heap, rather than at an Agile shop. See http://manage.techwell.com/articles/weekly/three-amigos for an alternative approach.

1. Wrong tool selection: Yes, but not for the reasons you state. Usually it's wrong because it can't be used by anyone, due to high license costs, and doesn't support writing the tests before implementation.

2. Starting at the wrong time: The mistake is starting too late, not too early. Testing starts while discussing the requirements.

3. Framework: No tasks have story points. Test frameworks, like application frameworks, should be "grown" to support current needs, not developed ahead of time.

4. Test Case Selection: No, not all tests need to be automated, but most orgs automate too few, delaying crucial feedback about the product.

5. Test Automation vs. Manual Automation: I'm not sure what "manual automation" is, but the problem I see is that you're isolating your testers into multiple subteams. Put the entire development team together and they can work out what is best automated and what is best explored manually.

I'd like to write a longer comment, but why bother? You covered all the basics, just fine. :)

Excellent article. It was very useful, and you analyzed the most important key factors.

Interesting article with some fresh perspectives.

I wish to add a point to '4. Test Case Selection'

- To increase the value proposition of automation, start by automating use cases, sorted by priority. This may sometimes mean a disconnect between test cases and automation scripts, and fixing the test cases to map to the scripts is the better option here. The automated set should then grow organically into the areas surrounding the automated cases. You can adopt this strategy at any time, i.e., even if you have a large number of legacy test cases.

Please share your comments on this.

I would like to see your definition of framework.

In my vocabulary, I have spent more than a year developing framework, which includes: Wrapping Selenium's functions, Managing Properties, Handling JSON, HTTP GET/POST, HTTPS GET/POST, SSH, Curl, PGP, PHP Serialization, Random Numbers, Random Strings [Unicode, Ascii, Alpha-numeric, etc.], Database queries, DB Functions, DB Stored Procedures, XML parsing, custom Lists, custom Maps, and an array of other pieces. I don't see how this could conceivably be done in a single sprint, even assuming you could use all off the shelf modules that all happened to work the first time. Even just designing the framework took multiple iterations and was not cleanly done in a 'sprint' like fashion. So maybe when you say "framework" you just mean getting a project setup with whatever tool you use to interact with the system under test?

- JCD

AHA! I should have looked at the company the author worked for before I commented. Wouldn't use them come hell or high water. Yes I HAVE worked with them, that's how I know (better).

I have been in the practical situation of following agile, and I agree with the author's comments on selecting test cases to ensure adequate regression coverage and an optimized execution cycle time. If all test cases from a sprint cycle are automated, the automated tests (especially UI flows) grow so large that it takes too long to run one complete execution cycle for a change introduced late in the cycle. Typically, 20% of the workflows are used by the business 80% of the time, and they are the most critical. Automation should aim for 100% coverage of those 20% frequently used, critical workflows, so they are not broken in a new release.

Most organizations do automation for show and put too many resources into targeting 100% coverage of all test cases, yet still have critical bugs slipping through to production. That is because they don't pay attention to what part of the product functionality is covered by automation. Most of the time, in a mature product, the vast majority of functionality is not used by many customers or is not critical for the business; automating it and running it every sprint cycle is a waste of money, effort, and time for the organization. Instead, focus automation coverage on business-critical, frequently used functionality. In fact, no matter how much you cover with automation, there will be issues, because the business uses the product in many different ways. But as long as the issues found in production are not showstoppers, I think business goals are met, exceeding expectations.

If you automate everything you test in a sprint for the first time, your execution cycle will take weeks or months, even if automated, as the product functionality grows.

Automating everything works for smaller products with 1-2 clients, not for enterprise products with a huge customer base. That's my 2 cents!