When a suite of automated tests takes days to run from start to finish, it pays to be able to choose which tests run and in what order. With the right structure in place, an automated test suite can be flexible enough to let a user change the tests executed every time the suite is run. Rebecca walks through the design steps of creating an interface-driven tool that gives test execution this versatility.

Automated software testing is not for the faint of heart. It requires skill, patience and, above all, organization. A suite of automated tests can be organized in a number of different ways. Most tool providers offer methods of organizing and executing scripts. These methods often involve scheduling scripts to run in order, a kind of batch-file execution. That is fine for many applications, but it offers little versatility when it comes to running only part of a script or test sequence. For that kind of versatility, it may be necessary to design a front end to the test suite that offers the user an interface-driven test selection tool.

Repetitive Tests

Repetitive test cases are common in software testing. It is a drag having to go through hundreds of mundane, redundant test cases, but they offer great test coverage. They also tend to be cut back under tight schedules: if ten of one hundred repetitive trials pass, risk analysis may conclude that the rest will probably pass as well. These types of tests are great candidates for test automation. Automation speeds up mundane testing and frees the tester for more thought-provoking, defect-sleuthing work such as exploratory or free-form testing.

Many applications require some form of repetitive test. Usually, any test that has to be run over and over with different parameter settings is easily automated with just a few functions. Tests that must run under different communication settings, display settings, environment settings, and so on are typical examples. Here are some example test specs for such cases:

Run DisplayTest_1 at 800x600

Run DisplayTest_1 at 1024x768

Run DisplayTest_1 at 1280x1024

Run DisplayTest_1 at 1600x1200

Run DisplayTest_2 at 800x600

Run DisplayTest_2 at 1024x768

And so on.

Run CommunicationTest_1 at 56K

Run CommunicationTest_1 at 9600

Run CommunicationTest_1 at 4800

Run CommunicationTest_2 at 56K

Run CommunicationTest_2 at 9600

And so on.


Breaking Down the Test Script

There are different methods for automating such tests. One is the record/playback method, which produces a very long script that can take days to run. Such a script systematically executes code to set the environment and code to execute the test, alternating between the two until all test cases are finished. This method requires a lot of maintenance and lacks error recovery and versatility. Breaking the script down into modular functions makes the code easier to maintain. The test cases above would require a unique function for each test (Test_1, Test_2, etc.), plus a function to set the display resolution for the first set of tests and a function to set the baud rate for the second set. Each of these functions should return a value indicating whether it succeeded and should leave the system in a known state. Instead of a long succession of executable lines, repeated lines are consolidated into single functions, and the automated test becomes a set of function calls. The body of the test would look something like this:

```c
SetDisplay("800x600");
RunDisplayTest_1();
SetDisplay("1024x768");
RunDisplayTest_1();
/* ...and so on... */

SetBaudRate("56K");
RunComTest_1();
SetBaudRate("9600");
RunComTest_1();
/* ...and so on... */
```
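The following C sketch shows one way a SetDisplay helper might follow the "return a status and leave a known state" rule. It assumes the setting arrives as a string such as "800x600" and that the four resolutions from the test specs are the supported ones. It also simulates the display with two variables instead of touching real hardware. TEST_OK, TEST_FAIL, and RestoreKnownState are hypothetical names.

```c
#include <stdio.h>

/* Status codes every helper returns (hypothetical convention). */
#define TEST_OK   0
#define TEST_FAIL 1

/* Simulated display state; a real script would query or drive the system. */
static int current_width  = 1024;
static int current_height = 768;

/* Resolutions this sketch treats as supported (taken from the test specs). */
static const int supported[][2] = {
    {800, 600}, {1024, 768}, {1280, 1024}, {1600, 1200}
};

/* Parse a "WIDTHxHEIGHT" string and apply it. Returns TEST_OK on success;
   on failure the current resolution is left unchanged. */
int SetDisplay(const char *resolution)
{
    int w, h;
    size_t i;

    if (sscanf(resolution, "%dx%d", &w, &h) != 2)
        return TEST_FAIL;                     /* malformed setting */
    for (i = 0; i < sizeof supported / sizeof supported[0]; i++) {
        if (supported[i][0] == w && supported[i][1] == h) {
            current_width = w;
            current_height = h;
            return TEST_OK;
        }
    }
    return TEST_FAIL;                         /* unsupported resolution */
}

/* Return the system to the known state every test expects at start-up
   (1024x768 is an arbitrary choice for this sketch). */
void RestoreKnownState(void)
{
    current_width = 1024;
    current_height = 768;
}
```

A failed call leaves the current resolution untouched. The caller can therefore log the failure and move on without guessing what state the system is in.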

This makes the script much shorter and more modular. If something must change in the code for running one of the tests, it only has to be changed in one place rather than multiple times throughout the script. Taking this idea of shortening the script and reusing code further, we can abstract out another layer of function calls. One more level of modularity will greatly increase the script's versatility.

In the above example, there is still a repeating pattern in the code: the functions SetDisplay and RunDisplayTest are called alternately. This pattern is useful for running through every test, but not for running a sample of tests. It is also inconvenient for changing the order in which tests execute. These changes could be made by editing the script, but a user can change the order of execution much more easily if the script is abstracted out one more layer.

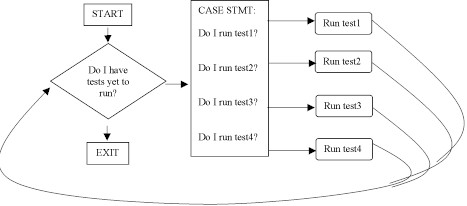

Here is a program flow dictating how this would look logically:

Developing a Data-Driven Script

It is common practice to import outside data into a script from a parsed data file of some sort. In a program such as the one above, the data file can actually serve as the driver for the whole tool. At the beginning of the script, a test list can be created every time the script runs. This data file tells the script how many times to loop through the case statement, which contains all of the test case function calls.

The data-file-driven script discussed here is implemented with a linked list. This implementation is simple, requires only a short main body, and is flexible, so it can be used in all kinds of test applications. One structure can support any set of tests.

Using the test cases above, a data file might look like this:

```
DisplayTest_1, 800x600, 1
DisplayTest_1, 1024x768, 1
DisplayTest_1, 1280x1024, 1
DisplayTest_1, 1600x1200, 1
DisplayTest_2, 800x600, 1
DisplayTest_2, 1024x768, 1
CommunicationTest_1, 56K, 1
CommunicationTest_1, 9600, 1
CommunicationTest_1, 4800, 1
CommunicationTest_2, 56K, 1
CommunicationTest_2, 9600, 1
```

Tests are listed one per line, in the order they are to be executed. The fields on each line are separated by commas: the test name, the parameter setting the test runs with, and a final entry indicating the number of times the test is to be run. This last element is optional, but very useful for repeating tests, because some tests reveal bugs only when they are run more than once. A loop count for each test provides that flexibility in selecting tests to run repeatedly.
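Parsing one line of this format is straightforward. The following C sketch is one possible approach, not the article's code. It assumes at most one setting per line, trims surrounding whitespace, and allows an empty setting. A missing or invalid loop count defaults to 1. parse_test_line and copy_trimmed are hypothetical helpers, and the TEST structure mirrors the type definition given later in the article.

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Mirrors the TEST type definition shown in the article. */
typedef struct test {
    char test[20];
    char setting1[20];
    int loop;
    struct test *next;
} TEST;

/* Copy len bytes of src into dst without surrounding whitespace,
   truncating if the field is longer than dst can hold. */
static void copy_trimmed(char *dst, size_t dstsize, const char *src, size_t len)
{
    while (len > 0 && isspace((unsigned char)*src)) {
        src++;
        len--;
    }
    while (len > 0 && isspace((unsigned char)src[len - 1]))
        len--;
    if (len >= dstsize)
        len = dstsize - 1;
    memcpy(dst, src, len);
    dst[len] = '\0';
}

/* Parse "name, setting, loop" into *out. Returns 0 on success or -1 if the
   line has no test name. A missing or non-positive loop count becomes 1. */
int parse_test_line(const char *line, TEST *out)
{
    const char *end = line + strcspn(line, "\r\n");   /* ignore the line ending */
    const char *c1 = memchr(line, ',', (size_t)(end - line));
    const char *c2 = c1 ? memchr(c1 + 1, ',', (size_t)(end - c1 - 1)) : NULL;

    copy_trimmed(out->test, sizeof out->test, line,
                 (size_t)((c1 ? c1 : end) - line));
    if (out->test[0] == '\0')
        return -1;                                    /* blank line */

    out->setting1[0] = '\0';
    out->loop = 1;                                    /* optional field default */
    out->next = NULL;
    if (c1 != NULL)
        copy_trimmed(out->setting1, sizeof out->setting1, c1 + 1,
                     (size_t)((c2 ? c2 : end) - c1 - 1));
    if (c2 != NULL) {
        char num[16];
        int n;
        copy_trimmed(num, sizeof num, c2 + 1, (size_t)(end - c2 - 1));
        n = atoi(num);
        if (n > 0)
            out->loop = n;
    }
    return 0;
}
```

Because the setting is simply whatever sits between the first and second commas, a line such as "Desktop, ,1" parses to an empty setting without any special case.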

Some applications will have tests that take more than one parameter, and not all tests will require the same number of parameters. In such a case, the data file should include fields for the maximum number of parameters any test may need, and tests that need fewer should have blank entries for the unused fields. For example, suppose a test called Desktop() is added to this suite. It checks for desktop corruption regardless of resolution or baud rate, so it takes no parameters. Adding it to the data file would look like this:

```
DisplayTest_1, 800x600, 1
DisplayTest_1, 1024x768, 1
DisplayTest_1, 1280x1024, 1
DisplayTest_1, 1600x1200, 1
DisplayTest_2, 800x600, 1
DisplayTest_2, 1024x768, 1
Desktop, ,1
CommunicationTest_1, 56K, 1
CommunicationTest_1, 9600, 1
CommunicationTest_1, 4800, 1
CommunicationTest_2, 56K, 1
CommunicationTest_2, 9600, 1
```

Note the empty parameter space for the Desktop test. Maintaining a uniform parsed list of tests is important when implementing a linked list. Uniformity in the early stages of test development will be important for creating a test structure that is flexible enough to run any test in any order.

Reading Data Into a Linked List

Using a parsed data file, the main body of a script can read the list of tests into a linked list. Each item in the linked list represents a test and contains information on each parameter needed for the test execution.

Define a TEST structure to contain a test name, a setting, a loop count, and a pointer to the next test in the list. The type definition for this example looks like this:

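```c
typedef struct test {
    char test[20];       /* test name, e.g. "DisplayTest_1" */
    char setting1[20];   /* parameter, e.g. "800x600"; empty if unused */
    int loop;            /* number of times to run the test */
    struct test *next;   /* next test in the list; NULL at the end */
} TEST, *pTest;
```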

The script can create a linked list of the test structure as it reads data from the parsed file.
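Here is a sketch of one way to build that list under a few assumptions. Lines are read with fgets and fields are split with sscanf. A blank setting is written as ", ," (with a space), as in the Desktop example. Nodes are appended at the tail so that execution order matches file order. load_test_list, free_test_list, and trim are hypothetical names.

```c
#include <ctype.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

typedef struct test {
    char test[20];
    char setting1[20];
    int loop;
    struct test *next;
} TEST, *pTest;

/* Strip leading and trailing whitespace (including the newline) in place. */
static void trim(char *s)
{
    char *start = s;
    size_t len;

    while (isspace((unsigned char)*start))
        start++;
    len = strlen(start);
    while (len > 0 && isspace((unsigned char)start[len - 1]))
        len--;
    memmove(s, start, len);
    s[len] = '\0';
}

/* Read "name, setting, loop" lines from fp and return the head of a list in
   file order. A missing loop count defaults to 1. */
pTest load_test_list(FILE *fp)
{
    char line[128];
    pTest head = NULL, tail = NULL;

    while (fgets(line, sizeof line, fp) != NULL) {
        TEST tmp = { "", "", 1, NULL };
        int fields = sscanf(line, "%19[^,],%19[^,],%d",
                            tmp.test, tmp.setting1, &tmp.loop);
        if (fields < 1)
            continue;                  /* nothing usable on this line */
        trim(tmp.test);
        trim(tmp.setting1);
        if (tmp.test[0] == '\0')
            continue;                  /* blank line */
        if (tmp.loop < 1)
            tmp.loop = 1;

        pTest node = malloc(sizeof *node);
        if (node == NULL)
            break;                     /* out of memory: keep what was read */
        *node = tmp;
        if (tail == NULL)
            head = node;               /* first test becomes the head */
        else
            tail->next = node;         /* append so run order = file order */
        tail = node;
    }
    return head;
}

/* Release every node once the run is finished. */
void free_test_list(pTest head)
{
    while (head != NULL) {
        pTest next = head->next;
        free(head);
        head = next;
    }
}
```

Appending at the tail, rather than pushing onto the head, is what preserves the user's chosen order. Pushing onto the head would be slightly simpler but would run the tests in reverse.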

Here is a representation of what the linked list will look like given the data file above:

Because the linked list can vary in length, it is a very versatile tool for flexible test execution. The script could read a one-line data file and build a test that loops 20 times in a row. It could equally read a one-hundred-line data file and execute 100 different tests in whatever order they were selected.

Running the Tests

In the flowchart above, there is a block that asks, "Do I have tests yet to run?" This block is implemented as a loop. A loop that executes once for every item in the linked list can call a function based on the test name stored in each node. The loop could look something like this:

```c
/* linkPtr starts at the head of the list; stop after the last node. */
while (linkPtr != NULL)
{
    if (strcmp(linkPtr->test, "DisplayTest_1") == 0)
        DisplayTest_1(linkPtr->setting1, linkPtr->loop);
    else if (strcmp(linkPtr->test, "DisplayTest_2") == 0)
        DisplayTest_2(linkPtr->setting1, linkPtr->loop);
    /* ...more else-if branches, one for each test... */

    linkPtr = linkPtr->next;   /* advance to the next test */
}
```

This loop contains a branch (effectively a case statement) for each test that can be executed. Each test function receives two parameters, 'setting1' and 'loop', and can call the functions that apply the setting as it executes. It is imperative that each function return the system to a known state: the condition the system is expected to be in every time a function starts. After a function completes and the system is back in the known state, the main script moves to the next node and continues looping until it reaches the end of the linked list.
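The chain of if statements works, but every new test means another branch in the loop. One alternative is a table that maps each test name to a function pointer sharing a common signature. It is shown here as a sketch, not as the article's implementation. The stub tests only count how often they run, and run_tests, TestFn, and test_table are hypothetical names. An unknown name, such as a typo in the data file, is reported and counted rather than silently skipped.

```c
#include <stdio.h>
#include <string.h>

typedef struct test {
    char test[20];
    char setting1[20];
    int loop;
    struct test *next;
} TEST, *pTest;

/* Every test function shares this signature and returns 0 on success. */
typedef int (*TestFn)(const char *setting, int loop);

/* Hypothetical stand-ins for real tests; they only count how often they run. */
static int display1_runs, desktop_runs;

static int DisplayTest_1(const char *setting, int loop)
{
    int i;
    (void)setting;               /* a real test would pass this to SetDisplay() */
    for (i = 0; i < loop; i++)
        display1_runs++;         /* one pass of the real test would go here */
    return 0;
}

static int Desktop(const char *setting, int loop)
{
    (void)setting;               /* this test takes no parameter */
    desktop_runs += loop;
    return 0;
}

/* Name-to-function table: supporting a new test means adding one row here. */
static const struct {
    const char *name;
    TestFn fn;
} test_table[] = {
    { "DisplayTest_1", DisplayTest_1 },
    { "Desktop",       Desktop       },
};

/* Walk the linked list and run each test by name. Returns the number of
   entries that failed or named an unknown test. */
int run_tests(pTest linkPtr)
{
    int failures = 0;

    for (; linkPtr != NULL; linkPtr = linkPtr->next) {
        TestFn fn = NULL;
        size_t i;
        for (i = 0; i < sizeof test_table / sizeof test_table[0]; i++) {
            if (strcmp(linkPtr->test, test_table[i].name) == 0) {
                fn = test_table[i].fn;
                break;
            }
        }
        if (fn == NULL) {
            fprintf(stderr, "Unknown test: %s\n", linkPtr->test);
            failures++;
        } else if (fn(linkPtr->setting1, linkPtr->loop) != 0) {
            failures++;
        }
    }
    return failures;
}
```

The main loop no longer changes when tests are added. Only the table grows.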

Given this structure, the tests may be run in the order listed above. Suppose it's discovered that DisplayTest_1 sometimes corrupts the desktop. The next time the suite runs, the tests can be run in a different order, with a desktop check after each display test:

```
DisplayTest_1, 1600x1200, 1
Desktop, ,1
DisplayTest_2, 800x600, 1
Desktop, ,1
DisplayTest_2, 1024x768, 1
Desktop, ,1
```

Perhaps CommunicationTest_2 occasionally finds issues at the 56K baud rate. A test could then quickly be set up to loop through that test with a data file like this one:

```
CommunicationTest_2, 56K, 20
```

Test Manager DLL

The modularity described so far is only as useful as it is easy to use. Changes to the data file must be quick and easy for a user to make. If a user who wants to execute a test has to go into code to make changes, it isn't easy. Editing the data file is best done through a sleek user interface.

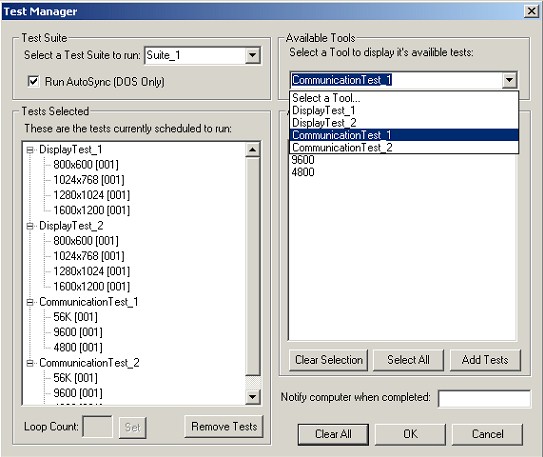

Creating a separate dll or script that can be called by the main script to create a data file for the program to read is one way to provide an interface. The interface can be created in Visual Basic, C++, or any number of languages. The interface dll can read in a set list of tests and then create an environment in which the tests can be rearranged or their parameters changed. Here is an example of an interface:

This example interface populates dropdown lists with tests that can be selected to run. When a test is selected, all parameter options are listed in the list box and can be selected and added to the list of tests to be run. Once the user has created a set of tests in the desired order and clicks OK, the data file can be generated. The file can then be read as described earlier and a linked list can be created for executing the tests.

The data file can serve a dual purpose: as a test driver and as a test record. Once saved, the data file can be kept as a record of the tests scheduled to run. It can also be edited to include PASS or FAIL at the end of each test line. Because the fields are comma-separated, this format is easily read when imported into a spreadsheet such as Microsoft Excel.
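Writing the record back out can be as simple as echoing each entry with a result column appended. The sketch below assumes a trailing "PASS"/"FAIL" field and comma-space separators. format_result_line and append_result are hypothetical names. The comma check is there because a comma inside a field would shift the spreadsheet columns.

```c
#include <stdio.h>
#include <string.h>

/* Format one record line: the original data-file fields plus a PASS/FAIL
   column. Returns what snprintf returns (the untruncated length). */
int format_result_line(char *buf, size_t size, const char *name,
                       const char *setting, int loop, int passed)
{
    return snprintf(buf, size, "%s, %s, %d, %s\n",
                    name, setting, loop, passed ? "PASS" : "FAIL");
}

/* Append one result line to an open record file. Returns 0 on success, or
   -1 if a field contains a comma, the line is too long, or the write fails. */
int append_result(FILE *record, const char *name, const char *setting,
                  int loop, int passed)
{
    char line[128];
    int n;

    if (strchr(name, ',') != NULL || strchr(setting, ',') != NULL)
        return -1;                            /* would break the CSV columns */
    n = format_result_line(line, sizeof line, name, setting, loop, passed);
    if (n < 0 || (size_t)n >= sizeof line)
        return -1;                            /* encoding error or truncated */
    return fputs(line, record) == EOF ? -1 : 0;
}
```

If this is called once per entry as the linked list is walked, it produces a file that a spreadsheet can open with the result in its own column.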

Other Features

When implementing an interface for tests, many features can be added for each project or suite of tests, as needed. In the above interface, there is a checkbox for synchronizing the system. There is also an edit box where users can enter their computer name and have a message sent to their computer when the automated test completes. User names, passwords, and the versions of software being tested could also be added to a test interface, which can help with logging information automatically. Some scripting tools offer these types of features. However, some test authors aren't using structured tools, and some tools' features can be expanded upon with a modular design of the type described in this article.

Foundation of Test Design

There are all sorts of applications for this type of automated test design, but these designs have to be built on a firm foundation. Writing functions so they can be used repeatedly, and so they leave the system in a known state, is essential. It also helps immensely to build error handling into these functions, so that if one test encounters an error, test execution can continue unhampered.
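Here is a minimal sketch of that error handling. It assumes each test function returns 0 on success and that some recovery routine can force the system back to its known state. RecoverToKnownState, run_with_recovery, and the simulated known-state flag are all hypothetical. A real script would check for things like open error dialogs or a changed resolution.

```c
#include <stdio.h>

/* Every test function returns 0 on success (assumed convention). */
typedef int (*TestFn)(const char *setting, int loop);

/* Simulated "known state" flag and recovery counter for this sketch. */
static int system_in_known_state = 1;
static int recoveries;

/* Hypothetical recovery routine: a real one might close error dialogs,
   restore the default resolution, or reset the serial port. */
static void RecoverToKnownState(void)
{
    system_in_known_state = 1;
    recoveries++;
}

/* Run one test so that a failure is logged and cleaned up instead of
   halting the suite. Returns 0 if the test passed, 1 if it failed. */
int run_with_recovery(const char *name, TestFn fn, const char *setting, int loop)
{
    int status;

    if (!system_in_known_state)
        RecoverToKnownState();          /* never start from an unknown state */
    status = fn(setting, loop);
    if (status != 0) {
        fprintf(stderr, "%s (%s) failed with status %d\n", name, setting, status);
        RecoverToKnownState();          /* a failed test may leave debris behind */
    }
    return status != 0;
}

/* Stub tests used to exercise the wrapper. */
static int passing_test(const char *setting, int loop)
{
    (void)setting;
    (void)loop;
    return 0;
}

static int failing_test(const char *setting, int loop)
{
    (void)setting;
    (void)loop;
    system_in_known_state = 0;          /* simulate an error dialog left open */
    return 2;
}
```

The failure is recorded and the system is reset before the wrapper returns, so the loop that walks the linked list never has to stop early.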

Questions can arise in the design of automated tests, such as: should parameters be set before entering the test function or inside it? The answer depends on the test being automated. If the test function needs to know the parameters, they should probably be set inside the function that executes the test. If the test is a dummy function that doesn't need to know the resolution or baud rate, the parameters can be set before entering the function that executes the test.

These types of structural questions will be answered as the automation is implemented. The really nice thing about a modular design like this one is that new tests can be added at any time, as long as they fit the defined 'test' structure. Any number of tests can be added, but they don't have to be executed every time the script runs. They can be skipped and rearranged, which can be a big help later as schedules, regression requirements, and time constraints change. It also makes training new people to execute automated tests a lot easier.